A bank executive opens a fraud dashboard in Microsoft Power BI.

Losses by region, chargeback ratios, transaction velocity trends and a heatmap of anomalous activity. The numbers refresh within minutes. Data flows out of the system of record, is reshaped and aggregated, then presented for interpretation.

This is contemporary analytics: fast and operationally impressive. But it remains interpretive. It explains what is happening, while intervention occurs elsewhere – inside a fraud model embedded in the execution path, deciding in milliseconds whether money moves or an account is frozen.

Reporting systems describe what has already occurred. Even when refreshed every few minutes, they are retrospective. Inference systems are anticipatory. They evaluate the present in order to shape what happens next.

For two decades, enterprise data platforms were built around a deliberate separation between systems of record and analytical platforms. The system of record handled revenue-generating transactions; analytics ran on copies refreshed hourly or, more recently, every few minutes. Latency narrowed, but the boundary remained.

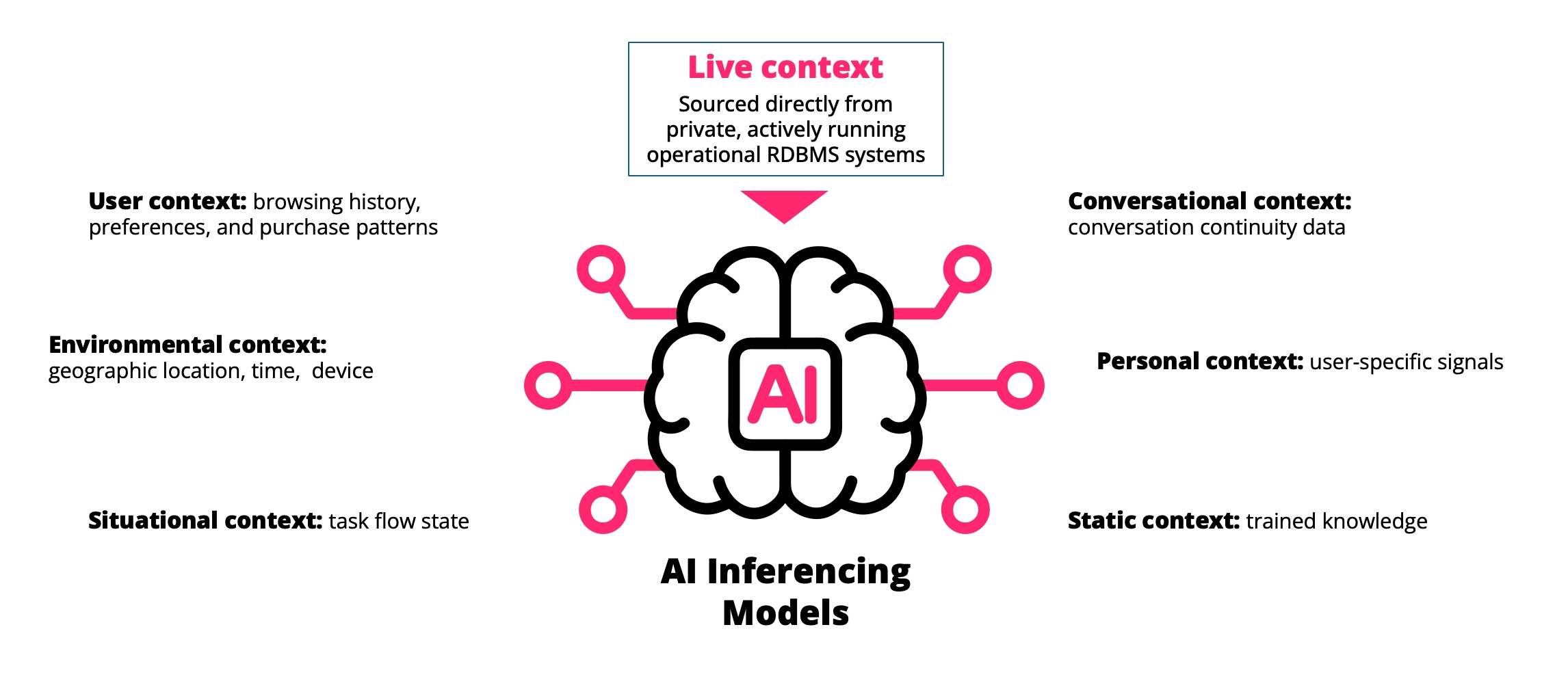

AI systems embedded in operational workflows do not consume summaries, however fresh. They make decisions inside the transaction itself. A real-time fraud model does not want a recently refreshed extract; it requires the authoritative state of the business at the moment of decision. When automation replaces interpretation, data freshness becomes a requirement of decision integrity. That shift changes the role of the database entirely.

Snapshots ≠ State

The difference is not batch versus real time. It is snapshot versus canonical state.

A snapshot is a materialised representation of state at a prior point in time – even if that point is only moments earlier. It may be refreshed frequently or continuously streamed, but it remains a copy. The system of record contains the canonical state of the enterprise – balances, limits, flags and relationships – reflecting the legal and financial truth as each transaction commits.

In fraud detection, that distinction is decisive. A dashboard can tolerate slight delay because its purpose is explanation. A model embedded in the execution path cannot. It must evaluate the current balance, transaction velocity and account status, not a recently materialised copy of them.
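To make the distinction concrete, here is a minimal Python sketch. It assumes a hypothetical `AccountState` record and a toy `decide` rule with an invented velocity threshold; neither comes from any real fraud system. The same rule, applied to a snapshot taken moments before a burst of activity and an account freeze, approves a payment that the canonical state would decline.

```python
from dataclasses import dataclass

# Hypothetical account state; field names and thresholds are illustrative only.
@dataclass(frozen=True)
class AccountState:
    balance: float          # available balance when this state was read
    txns_last_minute: int   # transaction velocity
    frozen: bool            # account-status flag

MAX_VELOCITY = 5  # assumed velocity threshold, not a real-world value

def decide(state: AccountState, amount: float) -> str:
    """Toy authorisation rule: it can only be as correct as the state it is given."""
    if state.frozen:
        return "decline"
    if state.txns_last_minute >= MAX_VELOCITY:
        return "decline"
    if amount > state.balance:
        return "decline"
    return "approve"

# A snapshot materialised moments ago, before a burst of activity and a freeze.
snapshot = AccountState(balance=1_000.0, txns_last_minute=1, frozen=False)

# Canonical state in the system of record at the moment of decision.
canonical = AccountState(balance=120.0, txns_last_minute=7, frozen=True)

print(decide(snapshot, 400.0))   # approve
print(decide(canonical, 400.0))  # decline
```

Nothing in the rule itself is wrong; the error comes entirely from which version of the state it reads.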

For years, we increased the distance between analytics and the system of record to protect transactional stability. That separation reduced risk in a world where insight followed action.

Automation reverses that order. Insight now precedes action. Once decisions are automated, the gap between a copy of the data and the authoritative source becomes consequential.

When Almost Right Is Still Wrong

If a fraud dashboard is slightly stale, an analyst may adjust a threshold next week. When a fraud model evaluates incomplete or delayed state, the error is executed immediately and repeated at scale.

False declines can lock customers out within minutes. False approvals can let substantial losses accumulate before discrepancies surface in reporting. Automation compresses time and amplifies mistakes because there is no interpretive buffer.

Real-time intervention is inevitable. Competitive pressure and regulatory scrutiny demand it. But once decisions are automated, tolerance for architectural distance shrinks. A delay harmless in reporting can be material in a decision stream. A dataset “close enough” for analytics may be insufficient for automated intervention.

The risk is not that dashboards are wrong; it is that forward-looking systems may act on something almost right.

Databases in the Age of Intervention

When fraud detection becomes automated intervention rather than retrospective analysis, the requirements on the data platform change. Freshness is defined at the moment of decision, not by refresh intervals.

Replication patterns take on new significance. Asynchronous copies and downstream materialisations were designed to protect the system of record. They optimise scale and isolation, but every layer introduces potential lag or divergence. For reporting, that trade-off is acceptable. For automated decisions in revenue-generating workflows, it becomes a risk.
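One common mitigation, sketched here under loose assumptions, is to give each read path an explicit staleness budget and route it accordingly. The `Store` class, its commit sequence numbers and `choose_store` are hypothetical stand-ins, not any particular database's API; real systems expose replication progress in their own ways. A reporting query can tolerate a large lag, while a decision in the execution path is given a budget of zero, which sends it back to the primary unless the replica is fully caught up.

```python
from dataclasses import dataclass, field

@dataclass
class Store:
    """Hypothetical in-memory stand-in for a database node."""
    name: str
    applied_up_to: int  # sequence number of the last commit applied on this node
    rows: dict = field(default_factory=dict)

def choose_store(primary: Store, replica: Store, max_lag_commits: int) -> Store:
    """Serve the read from the replica only if it trails the primary by at most
    max_lag_commits commits; otherwise fall back to the primary."""
    lag = primary.applied_up_to - replica.applied_up_to
    return replica if lag <= max_lag_commits else primary

# The replica trails the primary by 50 commits, one of which froze acct-42.
primary = Store("primary", applied_up_to=10_050, rows={"acct-42": {"frozen": True}})
replica = Store("replica", applied_up_to=10_000, rows={"acct-42": {"frozen": False}})

reporting = choose_store(primary, replica, max_lag_commits=1_000)  # dashboard: lag tolerated
decision = choose_store(primary, replica, max_lag_commits=0)       # fraud decision: no lag allowed

print(reporting.name, reporting.rows["acct-42"]["frozen"])  # replica False
print(decision.name, decision.rows["acct-42"]["frozen"])    # primary True
```

The design choice is that freshness becomes a property of the request rather than of the infrastructure: the same replica serves the dashboard and is refused by the fraud decision.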

Workload separation also looks different. When analytics is retrospective, distance protects performance. When inference is embedded in operational workflows, proximity to live transactional state matters. The challenge is enabling safe, predictable access to that live state without compromising either correctness or the performance of the transactional system.

Fraud detection is simply the clearest example. Dynamic pricing, credit approvals, supply chain routing and clinical triage all follow the same pattern. The model is not generating a report about what happened; it is evaluating the present to influence what happens next.

For decades, enterprise architecture assumed intelligence followed events. As AI systems become anticipatory and automated, intelligence precedes action. The database is no longer simply the foundation of record-keeping.

It becomes part of how the future is decided – whether we are comfortable with that or not.