In the previous post, I looked at Gartner’s recent assertion that 75% of databases will be deployed to the cloud by 2022 – and that the cloud is now the default platform for managing data.

The massive shift to the public cloud has a lot of implications, many of which have been written about at length over the last few years. But one question I don’t think has been asked enough is: what does this mean for the poor, beleaguered database administrator? Let’s start with a look at the journey DBAs have been on since “the old days”.

DBA 1.0: The (Good) Old Days

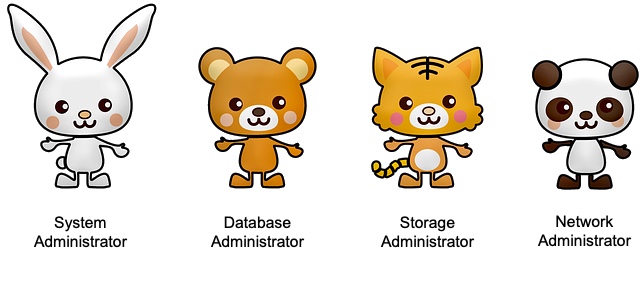

Data centres used to contain four distinct tribes of beings living in semi-peaceful co-existence: SysAdmins, DBAs, Network Admins and Storage Admins.

Four groups of specialists, each with a distinct skillset and a fairly delineated boundary of responsibility. I say four, it was really three – as everybody who remembers this era will attest, Network Admins were actually mythical creatures who never inhabited their desks; historical evidence now suggests that they were actually just a simple script which automatically closed any ticket with the phrase “No problems were found with the network”.

The database administrator occupied a unique position in this family, because they lived further up in the application stack and so dealt with developers and application owners, business users and sometimes – whisper it – those wondrous beings, the “end users”. Conveniently, this made the DBA the perfect person to blame for almost any problem at any layer in the stack. Application slow? Must be a database problem. Query taking too long? MUST be a database problem. Never mind that the database server doesn’t have enough memory, the developers have no concept of how to code in SQL and the storage system is a RAID5 bag of spanners running on spinning rust… it’s always a database problem. And we know it’s not a networking problem because it says here that “No problems were found with the network”.

One outcome of this “unique” position was that many DBAs had to learn skills outside of their core profession (networking, Linux or Windows admin skills, SQL tuning, PL/SQL decoding, hostage negotiation etc.). I’d love to say this thirst for knowledge was due to professional pride, but the best DBAs I ever met simply learned these skills so they could prove they weren’t in the wrong and thus get an easier life. “Oh, you think your SQL runs slow because of my database, huh? Well, if you rewrote it like this, it runs in 10% of the time and doesn’t make all the lights go dim in the data centre, you imbecile…”


DBA 2.0: The IT Generalist

As the data centre evolved and new technologies such as Virtualization, NoSQL, Hadoop and the Cloud became prevalent, the clearly defined roles of yesteryear started to become blurred. In the last decade, we saw the rise of a new creature in the data centre: The IT Generalist. Of course, this is mainly just another way of saying DBA with Extra Responsibilities (but no extra pay). It is now commonplace for DBAs to be managing a multitude of different technologies outside of the traditional RDBMS: many DBAs are managing, at least at some level, VMware clusters or other virtualization platforms; I know DBAs who have had tangles with firewalls and software-defined networking… I have even met a large number of DBAs who admin their All-Flash storage arrays (simpler than the old-fashioned disk array, after all).

As a side note, anyone with the job title of “Oracle DBA” also found themselves lumbered with managing any technology which was Oracle-badged – and that’s a lot of stuff. Fusion Middleware, Oracle Linux, WebLogic, Oracle ZFS Appliance, anything running under Automatic Storage Management, even Java! The list goes on… how long before somebody gets a ticket because TikTok isn’t working properly?

Larry Ellison might have famously said he wants to get rid of the DBA, but the reality is that the DBA role has just become even more wide-ranging.

DBA 3.0: The Cloud DevOps DBA

Fast forward to 2020 and the DBA is now managing applications running on databases which run in containers on virtual machines in the cloud, probably deployed via some sort of infrastructure-as-code implementation. Hey, the dream of the modern IT organisation is to achieve some utopian level of automation – and it’s the DBA who has the most practice at automating cross-function tasks; they’ve been trying to do it for years just for an easier life. (Note how the dream of “an easier life” motivates so much of DBA behaviour!)

Of course, everything is now DevOps too… right? If you aren’t DevOps, you aren’t in the gang. Remember when everything had to be agile? But, when you scratched the surface, “agile” was just a way of saying “we haven’t documented any of this”. Well, DevOps has taken over from agile as the buzzword of choice. And the literal translation of “DevOps” is “we still didn’t document anything but also we aren’t going to follow any kind of change control procedures or put any of these code releases through anything more than the most primitive of testing routines, so good luck”.

But in this long evolutionary journey, there is one thing that DBAs have never been exposed to … until today. Cost. As a DBA, you may have had to argue for more powerful servers, faster CPUs, more database processor licenses, cost options (“I need the Tuning Pack, damnit!”), but the cloud is a different ball game. A DBA building a database in the public cloud is making decisions which have a direct effect on the (quite possibly massive) monthly bill from AWS / Azure / GCP / Oracle Cloud / other vendor of choice. This is what I wanted to look at in this post before I got massively carried away.

DBAs of the World, Unite!

I’ll be honest, I didn’t intend this post to become some sort of DBA Manifesto, but once I started typing I couldn’t stop. Blogging is like that sometimes. In the next post, we’ll delve a bit deeper into the future of DBAs and the cloud cost angle. In the meantime, let’s summarise:

Everybody knows that the DBA is the humble, hard-working hero of Enterprise IT: dedicated and underpaid, overburdened and undertrained, blamed for everything and thanked for nothing… the DBA really is the Morlock of the data centre, working long nights and hard weekends to keep all those wonderful, spoilt Eloi end users happy*. If you are a DBA, give yourself a pat on the back for surviving this evolutionary journey. If you’re a SysAdmin, be honest: you guys need to buy your DBAs a drink now and then. And if you are a Network Admin: stick to the script.

* If the Morlock and Eloi references aren’t working for you, read this.