I was invited to Microsoft HQ in the UK yesterday to be a speaker at one of their launch events for SQL Server 2012. It’s the second of these events that I’ve appeared at and it finally made me realise I need to change something about this blog.

Until now I have resisted making any critical remarks about the Oracle Exadata product here, other than to quote the facts as part of my History of Exadata series. I’m going to change that now by offering my own opinions on the product and Oracle’s strategy around selling it.

Before I do that I should establish my credentials and declare any bias I may have. For a number of years, until very recently in fact, I was an employee of Oracle Corporation in the UK where I worked in Advanced Customer Services. I began working with Exadata upon the release of the “v2” Sun Oracle Database Machine and at the time of the “X2” I was the UK Team Lead for Exadata. I personally installed and supported Exadata machines in the UK and also trained a number of the current Exadata engineers in ACS (although that wasn’t exactly difficult as all of the ACS engineers I know are excellent). I also used to train the sales and delivery management communities on Exadata using my trademarked “coloured balls” presentation (you had to be there).

I now work for Violin Memory, a company that (to a degree) competes with Oracle Exadata. Exadata is a database appliance, whilst Violin Memory make flash memory arrays… so that doesn’t immediately sound like a true competition. But I’ll let you into a little secret: Exadata isn’t a database appliance at all – it’s an application acceleration product. That’s what it does: it takes applications which businesses rely on and makes them run faster. And in fact that’s also exactly what Violin is – it’s an application acceleration product that just happens to look like a storage array.

So now we have everything out in the open I’m going to talk about my issue with Exadata – and you can read this keeping in mind that everything I say is tainted by the fact that I have an interest in making Violin products look better than Oracle’s. I can’t help that, I’m not going to quit my exciting new job just to gain some journalistic integrity…

There are a number of critiques of Exadata out there on the web, ranging from technical discussions (the best of which are Kevin Closson’s Critical Analysis videos) to stories about the endless #PatchMadness from Exadata DBAs on Twitter. My main issue is much more fundamental:

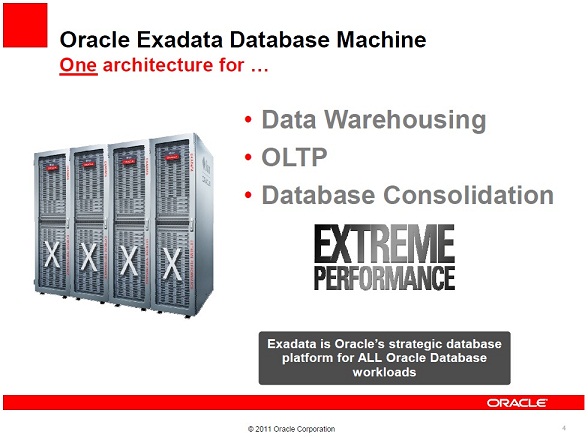

Oracle now say that Exadata is the strategic database platform for ALL database workloads. This was not always the case. If you read my History of Exadata piece you will see that when the original v1 HP Oracle Database Machine was released, “Exadata” was the name of the storage servers. And those storage servers were, in Oracle’s own words, “Designed for Oracle Data Warehouses”.

Upon the release of the v2 Sun Oracle Database Machine there came an epiphany at Oracle: the realisation that flash technology was essential for performance (don’t forget I’m biased). This was great news for Violin as back in those days (this was 2009) flash was still an emerging technology. However, the Sun F20 Accelerator cards that were added to the v2 were (in my biased opinion) pretty old tech and Oracle was only able to use them as a read cache. Still, that didn’t stop Oracle’s marketing department (never one to hold back on a bold claim) from making the statement that the v2 was “The First Database Machine For OLTP”. We are now on the X2 model (really only a minor upgrade in CPU and RAM from the v2) and Oracle has added Database Consolidation to the list of things that Exadata does. And of course the new bold claim has now appeared, as in the image above, “Exadata is Oracle’s strategic database platform for ALL database workloads”. Sure, the X2 now comes in two models, the X2-2 and the X2-8, but they aren’t actually different in terms of the features that you get above a normal Oracle database… you still get the same Exadata storage, Hybrid Columnar Compression and Exadata Flash Cache features regardless of the model.

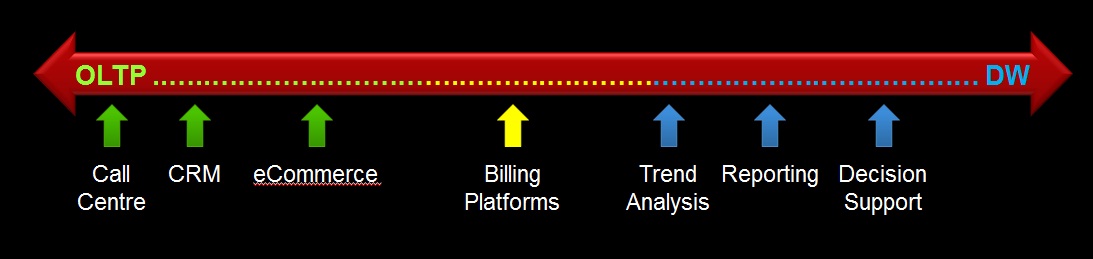

So what’s my problem with this? Well, first of all let’s just think about what a workload is. Essentially you can define the workload of a database by the behaviour of its users. There are two main types of workload in the database world: Online Transaction Processing (OLTP) and Data Warehousing (DW). OLTP systems tend to have highly transactional workloads, with many users concurrently querying and changing small amounts of data. Conversely, DW systems tend to have a smaller number of power users who query vast amounts of data, performing sorts and aggregations. OLTP systems experience huge amounts of change throughout their working period (e.g. 9am-5pm for a national system, 24×7 for a global system). DW systems on the other hand tend to remain relatively static except during ETL windows when massive amounts of data are loaded or changed.

In fact, you can pretty much picture any workload as fitting somewhere on a scale between these two extremes:

Of course, this is a sweeping generalisation. In practice no system is purely OLTP or purely DW. Some systems have windows during which different types of workload occur. Consolidation systems make things even more complicated because you can have multiple concurrent workloads taking place.

There’s a point to all this though. Take a random selection of real life databases and look at their workloads. If you agree with my OLTP <> DW scale above then you will see that they all fit in different places. Maybe you don’t agree with it though and you think there are actually many more dimensions to consider… no matter. What we should all be able to agree on is this:

In the real world, different databases have different workloads.

And if we can agree on that then perhaps we can also agree on this:

Different workloads will have different requirements.

That’s simple logic. And to extend that simple logic just one more step:

One design cannot possibly be optimal for many different requirements.

And that’s my problem with Oracle’s strategy around selling Exadata. We all know that it was originally designed as a data warehouse solution. Although I defer to Kevin’s knowledge about the drawbacks of an asymmetric shared-nothing MPP design, I always thought that Exadata was an excellent DW product and something that (at the time) seemed like an evolutionary step forward (although I now believe that flash memory arrays are a revolutionary step forward that make that evolution obsolete – keep remembering that I’m biased though). But it simply cannot be the best solution for everything because that doesn’t make sense. You don’t need to be technical to get that, you don’t even need to be in IT.

Let’s say I wanted to drive from town A to town B as fast as I can. I’d choose a Ferrari, right? That’s my OLTP requirement. Now let’s say I wanted to tow a caravan from A to B: I’d need a 4×4 or something with serious towing ability – definitely not a Ferrari. There’s my DW requirement. Now I need to transport 100 people from A to B. I guess I’d need a coach. That’s my Database Consolidation requirement. There is no single solution which is optimal for all requirements, only a set of solutions which are better at some and worse at others.

A final note on this subject. The Microsoft event at which I spoke was about Redmond’s new set of database appliances: the Database Consolidation Appliance, the Parallel Data Warehouse, and the Business Decision Appliance. Microsoft have been lagging behind Oracle in the world of appliances but I believe that they have made a wise choice here in offering multiple solutions based on customer workload. And they are not the only ones to think this. Look at this document from Bloor comparing IBM and Exadata:

“Oracle’s view of these two sets of requirements is that a single solution, Oracle Exadata, is ideal to cover both of them; even though, in our view (and we don’t think Oracle would disagree), the demands of the two environments are very different. IBM’s attitude, by way of contrast, is that you need a different focus for each of these areas and thus it offers the IBM pureScale Application System for OLTP environments and IBM Smart Analytics Systems for data warehousing.”

Now… no matter how biased you think I am… Maybe it’s time to consider if this strategy of Oracle’s really makes sense?