This cookbook is part of the Advanced Cookbooks series, for installing Oracle on Violin using a 4k-based ASM diskgroup. If you are unsure what that means, do not proceed any further until you have read this. Configuring ASM to use 4k sectors is interesting, but not necessary – you can achieve excellent performance by following one of the Simple Cookbooks with the added benefit that your installations will take a lot less time…

So it’s time to create an Oracle 11gR2 database on a Violin Memory flash storage array. I have a Linux system with the Oracle Linux 5 Update 7 operating system installed, which by default runs Oracle’s Unbreakable Enterprise Kernel (based on the 2.6.32 kernel):

[root@oel57 ~]# cat /etc/oracle-release
Oracle Linux Server release 5.7
[root@oel57 ~]# uname -s -n -r -m
Linux oel57 2.6.32-200.13.1.el5uek x86_64

I have already disabled selinux and turned off the iptables firewall. I also configured NTP in order to avoid Oracle complaining during the installation process.

Setup YUM and Install Oracle-Validated

The easiest way to configure a RHEL or OL system for use with an Oracle database is always to use the oracle-validated RPM, so I’ll set up yum and install it:

[root@oel57 ~]# cd /etc/yum.repos.d
[root@oel57 yum.repos.d]# wget http://public-yum.oracle.com/public-yum-el5.repo

I need to edit the repo file to set enabled=1 for the correct channel [ol5_u7_base] and then I can install the oracle-validated RPM using yum:
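If you would rather script that edit than open the file by hand, something along these lines should do it. This is only a sketch: the function name enable_repo_section is my own invention, and it assumes the repo file uses the usual layout of a [section] header followed by key=value lines, including an existing enabled= line in the section.

```shell
# Hypothetical helper (not part of yum): set enabled=1 inside one named
# section of a yum .repo file, leaving every other section untouched.
# Usage: enable_repo_section <repo-file> <section-name>
enable_repo_section() {
    repo_file=$1
    section=$2
    tmp_file=$(mktemp) || return 1
    # Track which [section] we are in; rewrite the enabled= line only there.
    awk -v want="[$section]" '
        /^\[.*\]$/ { in_section = ($0 == want) }
        in_section && /^enabled[ \t]*=/ { print "enabled=1"; next }
        { print }
    ' "$repo_file" > "$tmp_file" && cat "$tmp_file" > "$repo_file"
    rm -f "$tmp_file"
}
```

On this system the call would be enable_repo_section /etc/yum.repos.d/public-yum-el5.repo ol5_u7_base.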

[root@oel57 yum.repos.d]# yum install oracle-validated -y
Loaded plugins: rhnplugin, security
This system is not registered with ULN.
ULN support will be disabled.
ol5_u7_base | 1.1 kB 00:00
Setting up Install Process
Resolving Dependencies
--> Running transaction check
---> Package oracle-validated.x86_64 0:1.1.0-14.el5 set to be updated
...

On and on it goes until everything is set up. It will automatically configure my sysctl.conf file for 11g and even create me an oracle user and the oinstall and dba groups.

[Incidentally, if you stumbled upon this page because you are searching on the phrase “This system is not registered with …” then may I divert your attention here.]

Install Device Mapper and Multipathing Daemon

I can use yum to check that the device mapper and multipathing daemon are installed, then enable the multipathing daemon to run at startup with the chkconfig command:

[root@oel57 ~]# yum list device-mapper device-mapper-multipath
Loaded plugins: rhnplugin, security
This system is not registered with ULN.
ULN support will be disabled.
Installed Packages
device-mapper.i386 1.02.63-4.el5 installed
device-mapper.x86_64 1.02.63-4.el5 installed
device-mapper-multipath.x86_64 0.4.9-23.0.9.el5 installed
[root@oel57 ~]# chkconfig --list multipathd
multipathd 0:off 1:off 2:off 3:off 4:off 5:off 6:off
[root@oel57 ~]# chkconfig multipathd on
[root@oel57 ~]# chkconfig --list multipathd
multipathd 0:off 1:off 2:on 3:on 4:on 5:on 6:off

I need to setup the multipath.conf file now:

[root@oel57 ~]# cp /usr/share/doc/device-mapper-multipath-0.4.9/multipath.conf /etc
[root@oel57 ~]# vi /etc/multipath.conf

I’ll add a device section for my Violin Memory array:

# ALUA

devices {

device {

vendor "VIOLIN"

product "SAN ARRAY ALUA"

path_grouping_policy group_by_prio

getuid_callout "/sbin/scsi_id -p 0x80 -g -u -s /block/%n"

prio_callout "/sbin/mpath_prio_alua /dev/%n"

path_checker tur

path_selector "round-robin 0"

hardware_handler "1 alua"

failback immediate

rr_weight uniform

no_path_retry fail

rr_min_io 4

}

# Non-ALUA

device {

vendor "VIOLIN"

product "SAN ARRAY"

path_grouping_policy group_by_serial

getuid_callout "/sbin/scsi_id -p 0x80 -g -u -s /block/%n"

path_checker tur

path_selector "round-robin 0"

hardware_handler "0"

failback immediate

rr_weight uniform

no_path_retry fail

rr_min_io 4

}

}

I’ll also blacklist every device node except the sd* SCSI devices, and make sure the defaults section includes the user_friendly_names option:

blacklist {

devnode "*"

}

blacklist_exceptions {

devnode "sd*"

}

defaults {

user_friendly_names yes

}

Once this file is saved I need to load the multipathing modules into the kernel and then run the auto-configuration command.

[root@oel57 ~]# modprobe dm-multipath
[root@oel57 ~]# modprobe dm-round-robin
[root@oel57 ~]# multipath -v2
create: mpath1 (SVIOLIN_SAN_ARRAY_BAFEFD2A8C2E2817) undef VIOLIN,SAN ARRAY
size=100G features='0' hwhandler='0' wp=undef
`-+- policy='round-robin 0' prio=1 status=undef
  |- 8:0:0:1 sdb 8:16 undef ready running
  `- 6:0:0:1 sdc 8:32 undef ready running
create: mpath2 (SVIOLIN_SAN_ARRAY_BAFEFD2ACE3C949E) undef VIOLIN,SAN ARRAY
size=100G features='0' hwhandler='0' wp=undef
`-+- policy='round-robin 0' prio=1 status=undef
  |- 8:0:0:2 sdd 8:48 undef ready running
  `- 6:0:0:2 sde 8:64 undef ready running
create: mpath3 (SVIOLIN_SAN_ARRAY_BAFEFD2A9084A7A6) undef VIOLIN,SAN ARRAY
size=100G features='0' hwhandler='0' wp=undef
`-+- policy='round-robin 0' prio=1 status=undef
  |- 8:0:0:3 sdf 8:80 undef ready running
  `- 6:0:0:3 sdg 8:96 undef ready running
create: mpath4 (SVIOLIN_SAN_ARRAY_BAFEFD2ABCD78D26) undef VIOLIN,SAN ARRAY
size=100G features='0' hwhandler='0' wp=undef
`-+- policy='round-robin 0' prio=1 status=undef
  |- 8:0:0:4 sdh 8:112 undef ready running
  `- 6:0:0:4 sdi 8:128 undef ready running
...output truncated...
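The WWIDs in that output are what the aliases will be keyed on later, so it can be handy to pull them out as a simple list rather than copying them by eye. A minimal sketch, assuming the "create: name (wwid)" line format shown above (the helper name list_multipath_wwids is made up for illustration):

```shell
# Hypothetical helper: read "multipath -v2" output on stdin and print
# "<name> <wwid>" for every line that starts with "create:".
# All other lines (size, policy, individual paths) are ignored.
list_multipath_wwids() {
    sed -n 's/^create: \([^ ]*\) (\([^)]*\)).*/\1 \2/p'
}
```

It would be used as multipath -v2 | list_multipath_wwids. Note that it only matches the "create:" lines printed when the maps are first built; the output of multipath -ll is laid out differently and would need a different pattern.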

I now need to go and create aliases for these new devices back in the multipath.conf file, but before that I need to make some changes in order to ensure I get the best performance from these devices when I start issuing I/O calls.

Setup UDEV Rules

Operating systems use an I/O scheduler to determine the order in which I/O operations are submitted to storage. The default scheduler since the 2.6.18 Linux kernel has been Jens Axboe’s cfq (Completely Fair Queuing) scheduler – a clever set of algorithms which groups certain I/Os together to try and reduce the impact of disk seek time. Oracle, however, chooses to use the deadline scheduler as the default for the Unbreakable Enterprise Kernel – again written by Jens Axboe, this scheduler aims to ensure a guaranteed start service time for each I/O request. This is all well and good, but since my Violin Memory flash Memory Array has latency measured in microseconds there is no benefit to be had from rearranging my I/Os – in fact if I use the simplest scheduler, noop (also developed by Jens – it’s fair to say that he gets around), I will see a considerable improvement in performance.

To adjust which scheduler is used, the name of the scheduler can be echoed to the /sys/block/<device>/queue/scheduler file – and likewise this file can be read to see which scheduler is in use. Looking at the output from the multipath -v2 command above I can see that the device mpath1 actually corresponds to two SCSI block devices sdb and sdc, so let’s run this against sdb:

[root@oel57 ~]# cat /sys/block/sdb/queue/scheduler
noop anticipatory [deadline] cfq
[root@oel57 ~]# echo noop > /sys/block/sdb/queue/scheduler
[root@oel57 ~]# cat /sys/block/sdb/queue/scheduler
[noop] anticipatory deadline cfq
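The scheduler in use is the one shown in square brackets. If you want to script the same steps for several devices, a rough sketch could look like this. Both function names are hypothetical, and the SYSFS_ROOT variable is an assumption of mine that exists only so the functions can be tried against a scratch directory instead of the real /sys:

```shell
# Hypothetical helper: print the scheduler shown in [brackets].
# $1 is the contents of a queue/scheduler file,
# for example "noop anticipatory [deadline] cfq".
active_scheduler() {
    printf '%s\n' "$1" | sed -n 's/.*\[\([^]]*\)\].*/\1/p'
}

# Hypothetical helper: write a scheduler name for each listed device.
# Usage: set_scheduler <scheduler> <device>...
# SYSFS_ROOT defaults to /sys; override it only for dry runs.
set_scheduler() {
    sched=$1
    shift
    for dev in "$@"; do
        printf '%s\n' "$sched" > "${SYSFS_ROOT:-/sys}/block/$dev/queue/scheduler" || return 1
    done
}
```

For example, set_scheduler noop sdb sdc would cover both paths behind mpath1. Like the echo above, this does not survive a reboot.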

You can see that /dev/sdb is now using the noop scheduler – I would need to make the same change now to sdc and indeed all the other devices. However, not only is this time-consuming but the change will not be persistent across reboots, so to ensure that the noop scheduler is automatically used for all of the LUNs presented by the array I need to add a UDEV rule. I do this by creating a new UDEV rule file:

[root@oel57 ~]# vi /etc/udev/rules.d/60-vshare.rules

And adding the following text (all on one line):

KERNEL=="sd*[!0-9]|sg*", BUS=="scsi", SYSFS{vendor}=="VIOLIN", SYSFS{model}=="SAN ARRAY*", RUN+="/bin/sh -c 'echo noop > /sys/$devpath/queue/scheduler && echo 1024 > /sys/$devpath/queue/nr_requests'"

Note that I have also increased the queue size (“nr_requests”) from its default of 128. The benefit from this change is somewhat limited for flash storage (as Yoshinori Matsunobu’s blog demonstrates) but tests conducted in our lab have shown that there is some gain to be had from this small increase.

Now I need to reload the UDEV rules:

[root@oel57 ~]# udevcontrol reload_rules
[root@oel57 ~]# udevtrigger

I can now check that the devices have picked up this change by querying the /sys pseudo-filesystem again:

[root@oel57 ~]# cat /sys/block/sdc/queue/scheduler
[noop] anticipatory deadline cfq
[root@oel57 ~]# cat /sys/block/sdc/queue/nr_requests
1024
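With eighteen paths to look at, checking them one by one gets tedious. Here is a sketch of a check that reports only the devices which did not pick up the rule. The function name check_queue_settings and the SYSFS_ROOT override are my own assumptions, not anything provided by udev:

```shell
# Hypothetical check: confirm each device uses the expected scheduler and
# queue depth. Prints one line per mismatch and returns non-zero if any
# device differs.
# Usage: check_queue_settings <scheduler> <nr_requests> <device>...
# SYSFS_ROOT defaults to /sys; override it only for dry runs.
check_queue_settings() {
    want_sched=$1
    want_nr=$2
    shift 2
    rc=0
    for dev in "$@"; do
        q="${SYSFS_ROOT:-/sys}/block/$dev/queue"
        # The active scheduler is the name shown in [brackets].
        sched=$(sed -n 's/.*\[\([^]]*\)\].*/\1/p' "$q/scheduler")
        nr=$(cat "$q/nr_requests")
        if [ "$sched" != "$want_sched" ] || [ "$nr" != "$want_nr" ]; then
            echo "$dev: scheduler=$sched nr_requests=$nr"
            rc=1
        fi
    done
    return $rc
}
```

A call such as check_queue_settings noop 1024 sdb sdc sdd sde prints nothing when every listed device matches.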

Add Multipath Aliases

Now this next step is not technically necessary if you are going to use the ASMLib kernel driver. I can see from the output of the multipath -ll command (which I won’t repeat as it’s the same as the output from multipath -v2 up above) that my various paths have been combined into virtual devices called /dev/mapper/mpath<number> based on the WWIDs of the LUNs they correspond to. It would be good practice however to setup aliases in the multipath.conf file to give each one of these virtual devices a clearer name. I can choose something like /dev/mapper/violin_lun<number> or I could get really into it and start calling them things like /dev/mapper/DATA1 (based on their intended use in ASM). I think I’ll stick with the former. So each LUN will have an entry in the multipath.conf file which looks like this:

multipaths {

multipath {

wwid SVIOLIN_SAN_ARRAY_BAFEFD2A8C2E2817

alias violin_lun1

}

multipath {

wwid SVIOLIN_SAN_ARRAY_BAFEFD2ACE3C949E

alias violin_lun2

}

...etc...

On the other hand, if I was not going to use ASMLib then I would definitely need to create these aliases because a) I would need to guarantee persistent naming (imagine what ASM would do if the devices changed name across reboots!), and b) I would need to set the ownership, group and permissions so that ASM could read and write to them:

multipaths {

multipath {

wwid SVIOLIN_SAN_ARRAY_BAFEFD2A8C2E2817

alias violin_lun1

uid 54321

gid 54322

mode 660

}

multipath {

wwid SVIOLIN_SAN_ARRAY_BAFEFD2ACE3C949E

alias violin_lun2

uid 54321

gid 54322

mode 660

}

...etc...

The uid and gid numbers obviously correspond to the software owner and group for the ASM instance – in a normal installation this would be oracle and dba, in a role-separation environment it would be grid and asmadmin. You could also use UDEV rules to change the ownership, group and permission – but really, why go through all the hassle when ASMLib will happily do it for you?
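Typing nine of these stanzas by hand is a good way to introduce a typo, so here is a sketch of a small generator. The function name multipath_stanza is hypothetical; it simply prints the same keywords used in the entries above and leaves out the ownership lines when they are not supplied:

```shell
# Hypothetical generator: print one multipath { } stanza for multipath.conf.
# Usage: multipath_stanza <wwid> <alias> [<uid> <gid> <mode>]
# The uid, gid and mode lines are written only when all three are given.
multipath_stanza() {
    printf 'multipath {\n'
    printf '    wwid %s\n' "$1"
    printf '    alias %s\n' "$2"
    if [ $# -ge 5 ]; then
        printf '    uid %s\n' "$3"
        printf '    gid %s\n' "$4"
        printf '    mode %s\n' "$5"
    fi
    printf '}\n'
}
```

For example, multipath_stanza SVIOLIN_SAN_ARRAY_BAFEFD2A8C2E2817 violin_lun1 54321 54322 660 prints the first entry shown above. The output still has to be wrapped in the enclosing multipaths { } block, and the numeric ids can be looked up with id -u oracle and getent group dba.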

The last thing to do now is to flush the device mapper and order the multipather to pick up the new changes:

[root@oel57 ~]# multipath -F
[root@oel57 ~]# multipath -v2
[root@oel57 ~]# ls -l /dev/mapper/vio*
brw-rw---- 1 root disk 253, 2 Mar 16 16:59 /dev/mapper/violin_lun1
brw-rw---- 1 root disk 253, 3 Mar 16 16:59 /dev/mapper/violin_lun2
brw-rw---- 1 root disk 253, 4 Mar 16 16:59 /dev/mapper/violin_lun3
brw-rw---- 1 root disk 253, 5 Mar 16 16:59 /dev/mapper/violin_lun4
brw-rw---- 1 root disk 253, 11 Mar 16 16:59 /dev/mapper/violin_lun5
brw-rw---- 1 root disk 253, 8 Mar 16 16:59 /dev/mapper/violin_lun6
brw-rw---- 1 root disk 253, 7 Mar 16 16:59 /dev/mapper/violin_lun7
brw-rw---- 1 root disk 253, 10 Mar 16 16:59 /dev/mapper/violin_lun8
brw-rw---- 1 root disk 253, 9 Mar 16 16:59 /dev/mapper/violin_lun9

Install ASMLib

The next thing is to install ASMLib – although as I’ve said this isn’t mandatory. It is however quite a handy tool for device discovery, especially on clustered systems. Because I am running the Unbreakable Enterprise Kernel I do not need to install the kernel driver (it’s already built into the kernel) so I only need the library and the support package. The support package is available from the public yum server whilst the library has to be fetched from the ASMLib page on Oracle Technet and then installed locally:

[root@oel57 ~]# yum install oracleasm-support -y
...
[root@oel57 ~]# yum localinstall oracleasmlib-2.0.4-1.el5.x86_64.rpm -y
...
[root@oel57 ~]# yum list oracleasm\*
Installed Packages
oracleasm-support.x86_64 2.1.7-1.el5 installed
oracleasmlib.x86_64 2.0.4-1.el5 installed

I’ll configure ASMLib to run at startup and create block devices owned by oracle with a group of dba:

[root@oel57 ~]# /etc/init.d/oracleasm configure

Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library

driver. The following questions will determine whether the driver is

loaded on boot and what permissions it will have. The current values

will be shown in brackets ('[]'). Hitting <ENTER> without typing an

answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: oracle

Default group to own the driver interface []: dba

Start Oracle ASM library driver on boot (y/n) [n]: y

Scan for Oracle ASM disks on boot (y/n) [y]: y

Writing Oracle ASM library driver configuration: done

Initializing the Oracle ASMLib driver: [ OK ]

Scanning the system for Oracle ASMLib disks: [ OK ]

I want ASMLib to look at the devices created by the device mapper (/dev/dm*) and not the default SCSI devices (/dev/sd*) so I need to edit the /etc/sysconfig/oracleasm file and then restart the driver:

[root@oel57 sysconfig]# vi oracleasm

I’ll add “dm” to the ORACLEASM_SCANORDER line and then add “sd” to the ORACLEASM_SCANEXCLUDE line so that those devices are ignored:

# ORACLEASM_SCANORDER: Matching patterns to order disk scanning
ORACLEASM_SCANORDER="dm"
# ORACLEASM_SCANEXCLUDE: Matching patterns to exclude disks from scan
ORACLEASM_SCANEXCLUDE="sd"
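The same two settings can be applied from a script instead of vi. This is a sketch only: set_sysconfig_value is a made-up name, and it assumes the file holds plain KEY="value" lines as shown above.

```shell
# Hypothetical helper: set KEY="value" in a sysconfig-style file.
# An existing KEY= line is replaced; otherwise the line is appended.
# Usage: set_sysconfig_value <file> <key> <value>
set_sysconfig_value() {
    file=$1
    key=$2
    value=$3
    if grep -q "^$key=" "$file"; then
        tmp_file=$(mktemp) || return 1
        # Write the result back through the original path rather than
        # renaming a new file over it, so a symlinked config stays a symlink.
        sed "s|^$key=.*|$key=\"$value\"|" "$file" > "$tmp_file" && cat "$tmp_file" > "$file"
        rm -f "$tmp_file"
    else
        printf '%s="%s"\n' "$key" "$value" >> "$file"
    fi
}
```

Here that would be set_sysconfig_value /etc/sysconfig/oracleasm ORACLEASM_SCANORDER dm followed by the same call for ORACLEASM_SCANEXCLUDE with sd. The write-back through cat is deliberate: tools that replace the file outright, such as GNU sed -i without --follow-symlinks, turn a symbolic link into a regular file.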

I have repeatedly seen an issue with this /etc/sysconfig/oracleasm file where two versions get created with different contents. The way it appears to work is that when you install the oracleasm-support package a default configuration file is created at /etc/sysconfig/oracleasm. Next, when you run the /etc/init.d/oracleasm configure command, you will find that this file has been renamed to oracleasm-_dev_oracleasm and a symbolic link put in its place:

[root@oel57 sysconfig]# ls -l /etc/sysconfig/oracleasm*
lrwxrwxrwx 1 root root 24 Mar 19 13:23 /etc/sysconfig/oracleasm -> oracleasm-_dev_oracleasm
-rw-r--r-- 1 root root 778 Mar 19 13:26 /etc/sysconfig/oracleasm-_dev_oracleasm

I do not know why Oracle chooses to do this or why it occasionally breaks, but I have regularly returned to this directory to discover that both the oracleasm and oracleasm-_dev_oracleasm files are now regular files, i.e. there are two copies, possibly with differing contents. The symptom is often that after a reboot the ASMLib disks are no longer discoverable by ASM. The way to fix it appears to be to make sure the oracleasm-_dev_oracleasm file has the correct contents and then replace the oracleasm file with a symbolic link again.
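A sketch of that repair as a function, in case it needs doing more than once. The name restore_oracleasm_link is hypothetical, the target file name follows the ls -l listing above, and keeping the stray copy as oracleasm.bak is my own choice so that its contents can still be compared afterwards:

```shell
# Hypothetical repair: if <dir>/oracleasm has become a regular file, replace
# it with a symbolic link to oracleasm-_dev_oracleasm, keeping the stray file
# as oracleasm.bak for comparison.
# Usage: restore_oracleasm_link [<dir>]   (defaults to /etc/sysconfig)
restore_oracleasm_link() {
    dir=${1:-/etc/sysconfig}
    if [ -L "$dir/oracleasm" ]; then
        return 0    # already a symbolic link, nothing to do
    fi
    # Refuse to link to a target that does not exist.
    [ -f "$dir/oracleasm-_dev_oracleasm" ] || return 1
    if [ -e "$dir/oracleasm" ]; then
        mv "$dir/oracleasm" "$dir/oracleasm.bak" || return 1
    fi
    ln -s oracleasm-_dev_oracleasm "$dir/oracleasm"
}
```

It does not decide which copy has the right contents; check oracleasm-_dev_oracleasm before running it, then restart the driver.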

Once I have configured the SCANORDER and SCANEXCLUDE strings I need to restart the ASMLib driver:

[root@oel57 sysconfig]# /etc/init.d/oracleasm restart
Dropping Oracle ASMLib disks: [ OK ]
Shutting down the Oracle ASMLib driver: [ OK ]
Initializing the Oracle ASMLib driver: [ OK ]
Scanning the system for Oracle ASMLib disks: [ OK ]

Now I can get on with stamping the disks. I will create eight disks for my +DATA diskgroup and use the ninth for +RECO:

[root@oel57 ~]# for lun in `echo 1 2 3 4 5 6 7 8`; do
> oracleasm createdisk DATA$lun /dev/mapper/violin_lun$lun
> done
Writing disk header: done
Instantiating disk: done
Writing disk header: done
Instantiating disk: done
Writing disk header: done
Instantiating disk: done
Writing disk header: done
Instantiating disk: done
Writing disk header: done
Instantiating disk: done
Writing disk header: done
Instantiating disk: done
Writing disk header: done
Instantiating disk: done
Writing disk header: done
Instantiating disk: done
[root@oel57 ~]# oracleasm createdisk RECO /dev/mapper/violin_lun9
Writing disk header: done
Instantiating disk: done
[root@oel57 ~]# ls -l /dev/oracleasm/disks
total 0
brw-rw---- 1 oracle dba 253, 2 Mar 18 15:18 DATA1
brw-rw---- 1 oracle dba 253, 3 Mar 18 15:18 DATA2
brw-rw---- 1 oracle dba 253, 4 Mar 18 15:18 DATA3
brw-rw---- 1 oracle dba 253, 5 Mar 18 15:18 DATA4
brw-rw---- 1 oracle dba 253, 11 Mar 18 15:18 DATA5
brw-rw---- 1 oracle dba 253, 8 Mar 18 15:18 DATA6
brw-rw---- 1 oracle dba 253, 7 Mar 18 15:18 DATA7
brw-rw---- 1 oracle dba 253, 10 Mar 18 15:18 DATA8
brw-rw---- 1 oracle dba 253, 9 Mar 18 15:18 RECO

Install Grid Infrastructure

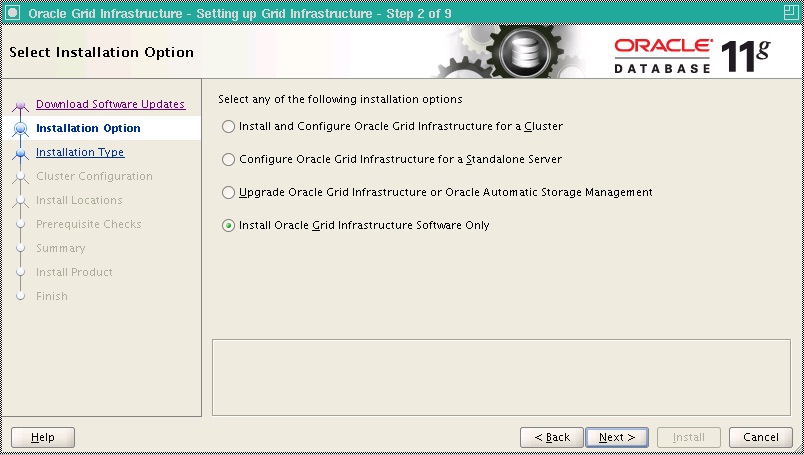

At this point I will install the 11.2.0.3 Grid Infrastructure software. However, I want to build my diskgroups with the SECTOR_SIZE=4k clause, which the OUI default installation of ASM does not have an option for… so I will build the ASM instance manually, which means performing a software-only install.

Important Note: You do not need to do this in order to achieve maximum performance from a 4k-based system such as Violin – see here for more details. Nothing from this point on is absolutely necessary, so if it looks like too much trouble simply make sure that the Violin LUNs are exported as 512 byte, then perform a normal Grid Infrastructure install. When finished, skip down to the bottom of this article to read the section entitled “Next Steps…”

As part of the software-only install you are asked to run the root.sh – the output from this then contains a message indicating how to setup Grid Infrastructure for either a Stand-Alone Server or for a Cluster. I’m doing this on a stand alone server:

[root@oel57 ~]# /u01/app/11.2.0.3/grid/perl/bin/perl -I/u01/app/11.2.0.3/grid/perl/lib -I/u01/app/11.2.0.3/grid/crs/install /u01/app/11.2.0.3/grid/crs/install/roothas.pl
Using configuration parameter file: /u01/app/11.2.0.3/grid/crs/install/crsconfig_params
Creating trace directory
User ignored Prerequisites during installation
LOCAL ADD MODE
Creating OCR keys for user 'oracle', privgrp 'oinstall'..
Operation successful.
LOCAL ONLY MODE
Successfully accumulated necessary OCR keys.
Creating OCR keys for user 'root', privgrp 'root'..
Operation successful.
CRS-4664: Node oel57 successfully pinned.
Adding Clusterware entries to inittab
oel57 2012/03/18 20:06:25 /u01/app/11.2.0.3/grid/cdata/oel57/backup_20120318_200625.olr
Successfully configured Oracle Grid Infrastructure for a Standalone Server

And now start up the css daemon:

[oracle@oel57]$ /u01/app/11.2.0.3/grid/bin/crsctl start resource ora.cssd
CRS-2672: Attempting to start 'ora.cssd' on 'oel57'
CRS-2672: Attempting to start 'ora.diskmon' on 'oel57'
CRS-2676: Start of 'ora.diskmon' on 'oel57' succeeded
CRS-2676: Start of 'ora.cssd' on 'oel57' succeeded

Create ASM Instance

First of all we need a parameter file. My Violin Memory array has an option on the LUN creation screen to display the LUN sector size to the operating system as either 512 bytes or 4k, despite the fact that the actual sector size is always 4k. This option exists because many operating systems and kernels cannot cope with the 4k sector size – as it happens my kernel, since it is based on 2.6.32, can cope with 4k but since the LUNs were created with the 512 byte option that is what it is seeing.

The outcome of this is that when I tell ASM the sector size is 4k it will check with the operating system to confirm this – and of course the operating system will say no the sector size is 512. To bypass this issue I can either recreate the LUNs or just set the hidden parameter _disk_sector_size_override to true, which tells ASM to ignore the OS and get on with it.
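Before deciding between the two options it is worth confirming what the operating system actually reports for each path. The sketch below reads the logical_block_size and physical_block_size files under the device's queue directory in sysfs. The function name and the SYSFS_ROOT override are my own assumptions, and I have not shown real output because what the array reports will depend on how the LUNs were exported:

```shell
# Hypothetical check: print the logical and physical sector size the kernel
# reports for each listed block device.
# Usage: report_sector_sizes <device>...
# SYSFS_ROOT defaults to /sys; override it only for dry runs.
report_sector_sizes() {
    for dev in "$@"; do
        q="${SYSFS_ROOT:-/sys}/block/$dev/queue"
        printf '%s logical=%s physical=%s\n' "$dev" \
            "$(cat "$q/logical_block_size")" \
            "$(cat "$q/physical_block_size")"
    done
}
```

For example, report_sector_sizes sdb sdc covers both paths behind the first LUN. A logical size of 512 here is what makes ASM refuse the 4k clause unless the override is set.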

First I need to setup the environment for my new ASM instance:

[oracle@oel57]$ ls -l /etc/oratab
-rw-rw-r-- 1 oracle oinstall 741 Mar 18 21:11 /etc/oratab
[oracle@oel57]$ echo "+ASM:/u01/app/11.2.0.3/grid:N" >> /etc/oratab
[oracle@oel57]$ . oraenv
ORACLE_SID = [oracle] ? +ASM
The Oracle base has been set to /u01/app/oracle
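Running that echo twice leaves two +ASM lines in the file. If this step is going into a script, a guarded version is safer. This is a sketch and add_oratab_entry is a name I made up:

```shell
# Hypothetical helper: append "<sid>:<home>:N" to an oratab file unless an
# entry for that SID is already present.
# Usage: add_oratab_entry <oratab-file> <sid> <oracle-home>
add_oratab_entry() {
    oratab=$1
    sid=$2
    home=$3
    # Compare the first colon-separated field as a plain string, so the "+"
    # in "+ASM" is never treated as a regular expression operator.
    if awk -F: -v sid="$sid" '$1 == sid { found = 1 } END { exit !found }' "$oratab"; then
        return 0
    fi
    printf '%s:%s:N\n' "$sid" "$home" >> "$oratab"
}
```

The equivalent of the command above is add_oratab_entry /etc/oratab +ASM /u01/app/11.2.0.3/grid.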

Now I can create the parameter file:

[oracle@oel57]$ mkdir -p /u01/app/oracle/admin/+ASM/pfile
[oracle@oel57]$ vi /u01/app/oracle/admin/+ASM/pfile/init+ASM.ora

So my ASM pfile will look like this:

_disk_sector_size_override=TRUE
asm_power_limit=5
diagnostic_dest='/u01/app/oracle'
instance_type='ASM'
large_pool_size=30M
memory_target=350M
remote_login_passwordfile='EXCLUSIVE'

I’m just going to start up a simple listener using CRS:

[oracle@oel57]$ srvctl add listener -l LISTENER
[oracle@oel57]$ srvctl start listener -l LISTENER

Now I can start up an ASM instance:

[oracle@oel57]$ cd $ORACLE_HOME/dbs
[oracle@oel57]$ ln -s /u01/app/oracle/admin/+ASM/pfile/init+ASM.ora
[oracle@oel57]$ sqlplus / as sysasm
SQL*Plus: Release 11.2.0.3.0 Production on Sun Mar 18 21:33:25 2012
Copyright (c) 1982, 2011, Oracle. All rights reserved.
Connected to an idle instance.
SQL> startup
ASM instance started
Total System Global Area 367439872 bytes
Fixed Size 2228464 bytes
Variable Size 340045584 bytes
ASM Cache 25165824 bytes
ORA-15110: no diskgroups mounted

It’s right about that, I need to create a diskgroup. But first I need to add ASM as a resource in CRS, for which I need to create an spfile:

SQL> create spfile from pfile;
create spfile from pfile
*
ERROR at line 1:
ORA-29786: SIHA attribute GET failed with error [Attribute 'SPFILE' sts[200] lsts[0]]

This is an error caused by ASM not being registered in CRS so I need to go and register it, although I don’t have all the details I need to do it right at the moment:

[oracle@oel57]$ srvctl add asm
[oracle@oel57]$ srvctl config asm
ASM home: /u01/app/11.2.0.3/grid
ASM listener: LISTENER
Spfile:
ASM diskgroup discovery string: ++no-value-at-resource-creation--never-updated-through-ASM++

At least now I can create the spfile:

SQL> create spfile='/u01/app/oracle/admin/+ASM/pfile/spfile+ASM.ora' from pfile;
File created.

And then go back and update CRS with the details of this spfile – also I need to amend the pfile in the $ORACLE_HOME/dbs directory to point to this:

[oracle@oel57]$ rm /u01/app/11.2.0.3/grid/dbs/init+ASM.ora
[oracle@oel57]$ echo "SPFILE=/u01/app/oracle/admin/+ASM/pfile/spfile+ASM.ora" > init+ASM.ora
[oracle@oel57]$ srvctl modify asm -p /u01/app/oracle/admin/+ASM/pfile/spfile+ASM.ora
[oracle@oel57]$ srvctl config asm
ASM home: /u01/app/11.2.0.3/grid
ASM listener: LISTENER
Spfile: /u01/app/oracle/admin/+ASM/pfile/spfile+ASM.ora
ASM diskgroup discovery string: ++no-value-at-resource-creation--never-updated-through-ASM++

While I’m here I will also create a password file for the ASM instance:

[oracle@oel57]$ cd /u01/app/11.2.0.3/grid/dbs
[oracle@oel57]$ orapwd file=orapw+ASM password=oracle
[oracle@oel57]$ ls -l orapw+ASM
-rw-r----- 1 oracle oinstall 1536 Mar 19 14:02 orapw+ASM

When I create the database it will require a user such as ASMSNMP with SYSDBA privileges (which of course in 11gR2 is not the highest privilege in ASM, that honour falling to SYSASM):

SQL> create user asmsnmp identified by oracle;
User created.
SQL> grant sysdba to asmsnmp;
Grant succeeded.

The final step now is to restart ASM so that it picks up the spfile – and it is also a good way to check that Clusterware is configured properly:

[oracle@oel57]$ srvctl stop asm

[oracle@oel57]$ srvctl start asm

[oracle@oel57]$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE oel57

ora.asm

ONLINE ONLINE oel57 Started

ora.ons

OFFLINE OFFLINE oel57

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.cssd

1 ONLINE ONLINE oel57

ora.diskmon

1 OFFLINE OFFLINE

ora.evmd

1 ONLINE ONLINE oel57

Create ASM Diskgroups

Now at this point the ASM_DISKSTRING parameter is null, which means by default it is using “ORCL:*” i.e. going via the ASMLib driver to discover disks. In my experience this does not always work, it depends very much on the kernel version, the ASMLib driver version and possibly the phases of the moon. The kfod utility will tell us one way or another:

[oracle@oel57]$ which kfod

/u01/app/11.2.0.3/grid/bin/kfod

[oracle@oel57]$ kfod cluster=false asm_diskstring='ORCL:*'

--------------------------------------------------------------------------------

Disk Size Path User Group

================================================================================

1: 102400 Mb ORCL:DATA1

2: 102400 Mb ORCL:DATA2

3: 102400 Mb ORCL:DATA3

4: 102400 Mb ORCL:DATA4

5: 105472 Mb ORCL:DATA5

6: 105472 Mb ORCL:DATA6

7: 105472 Mb ORCL:DATA7

8: 105472 Mb ORCL:DATA8

9: 102400 Mb ORCL:RECO

--------------------------------------------------------------------------------

ORACLE_SID ORACLE_HOME

================================================================================

+ASM /u01/app/11.2.0.3/grid

Perfect, my disks are visible, which means if I query the v$asm_disk dynamic view in ASM I should be able to see them:

SQL> select disk_number, header_status, state, label, path from v$asm_disk

2 order by disk_number;

DISK_NUMBER HEADER_STATUS STATE LABEL PATH

----------- ------------- -------- ---------- ----------

0 PROVISIONED NORMAL DATA1 ORCL:DATA1

1 PROVISIONED NORMAL DATA2 ORCL:DATA2

2 PROVISIONED NORMAL DATA3 ORCL:DATA3

3 PROVISIONED NORMAL DATA4 ORCL:DATA4

4 PROVISIONED NORMAL DATA5 ORCL:DATA5

5 PROVISIONED NORMAL DATA6 ORCL:DATA6

6 PROVISIONED NORMAL DATA7 ORCL:DATA7

7 PROVISIONED NORMAL DATA8 ORCL:DATA8

8 PROVISIONED NORMAL RECO ORCL:RECO

So for me it has worked and I am able to access the disks via ASMLib. This gives a performance improvement because the use of ASMLib reduces the number of file descriptors required by each database. However, if it does not work then you will need to set ASM_DISKSTRING to /dev/oracleasm/disks and recheck with kfod:

[oracle@oel57]$ kfod cluster=false asm_diskstring='/dev/oracleasm/disks'

--------------------------------------------------------------------------------

Disk Size Path User Group

================================================================================

1: 102400 Mb /dev/oracleasm/disks/DATA1 oracle dba

2: 102400 Mb /dev/oracleasm/disks/DATA2 oracle dba

3: 102400 Mb /dev/oracleasm/disks/DATA3 oracle dba

4: 102400 Mb /dev/oracleasm/disks/DATA4 oracle dba

5: 105472 Mb /dev/oracleasm/disks/DATA5 oracle dba

6: 105472 Mb /dev/oracleasm/disks/DATA6 oracle dba

7: 105472 Mb /dev/oracleasm/disks/DATA7 oracle dba

8: 105472 Mb /dev/oracleasm/disks/DATA8 oracle dba

9: 102400 Mb /dev/oracleasm/disks/RECO oracle dba

--------------------------------------------------------------------------------

ORACLE_SID ORACLE_HOME

================================================================================

+ASM /u01/app/11.2.0.3/grid

Of course it may be that you decided not to install ASMLib in the first place, in which case you will need to point ASM_DISKSTRING at the location of your block devices (which you need to ensure have the correct ownership, group and permissions for ASM to use).

I’m going to create my DATA diskgroup now, spread over eight LUNs with external redundancy. Note the sector size clause and the fact that compatibility has to be set to 11.2 for that clause to work:

SQL> CREATE DISKGROUP DATA
  2  EXTERNAL REDUNDANCY
  3  DISK 'ORCL:DATA1','ORCL:DATA2','ORCL:DATA3','ORCL:DATA4',
  4  'ORCL:DATA5','ORCL:DATA6','ORCL:DATA7','ORCL:DATA8'
  5  ATTRIBUTE
  6  'au_size'='64M',
  7  'sector_size'='4096',
  8  'compatible.asm' = '11.2',
  9  'compatible.rdbms' = '11.2';
Diskgroup created.

I also need a RECO diskgroup in which to place the fast recovery area (again with the sector_size clause):

SQL> CREATE DISKGROUP RECO
  2  EXTERNAL REDUNDANCY
  3  DISK 'ORCL:RECO'
  4  ATTRIBUTE
  5  'sector_size'='4096',
  6  'compatible.asm' = '11.2',
  7  'compatible.rdbms' = '11.2';
Diskgroup created.

That’s it, both diskgroups are there now and I can see the sector size if I query the attributes or just look at the output of lsdg in asmcmd:

[oracle@oel57]$ asmcmd
ASMCMD> lsdg
State    Type    Rebal  Sector  Block  AU        Total_MB  Free_MB  Req_mir_free_MB  Usable_file_MB  Offline_disks  Voting_files  Name
MOUNTED  EXTERN  N      4096    4096   67108864  828416    826880   0                826880          0              N             DATA/
MOUNTED  EXTERN  N      4096    4096   1048576   105472    105420   0                105420          0              N             RECO/

Next Steps…

We now have a working system with UDEV and multipathing correctly configured, as well as Oracle Grid Infrastructure up and running. That’s the end of this cookbook, but probably not the end of your journey as you will most likely want to install the database software too. The installation of the database using a 4k sector size is covered in the following cookbook – enjoy!

Installing Oracle 11.2.0.3 single-instance using 4k sector size
