The Biggest Gap In The Clouds? High Performance RDBMS

December 13, 2021

Over the course of the last few blog posts, we’ve looked at how an increasing number of database workloads are migrating to the cloud, how there is more than one path to get there… and why overprovisioning is one of the biggest challenges to overcome.

We’re talking about business-critical application workloads here: big, complex, demanding, mission-critical, sensitive, performance-hungry… When on-prem, they are almost certainly running on dedicated, high-end infrastructure. And that’s a potential issue when you then migrate them to run on “someone else’s computer”.

As we’ve discussed before, the cloud is really a big pool of discrete resources and services, all of which are available on demand. You want a managed PostgreSQL instance? Click! It’s yours. You want three hundred virtual machines on which you can install your own software? Clickety-click! Off you go. If you’ve got the budget, the cloud has got a way for you to spend it. But underneath it all, whether you are using PaaS databases supplied by the cloud provider or installing the database software on IaaS systems, you are sharing that infrastructure – and the available performance – with the rest of the world.

Cloud Outcomes: Optimization versus Modernization

For some database workloads moving to the cloud, the modernization path will be the best fit, which means they will likely move to Platform-as-a-Service solutions where the day-to-day management of the database, operating system and infrastructure is taken care of by the cloud provider. Some examples of this path: on-prem SQL Server databases moving to Azure SQL Managed Instance; Oracle Databases moving to Amazon RDS for Oracle, etc.

But there is usually a certain class of database workload which doesn’t easily fit into these pre-packaged PaaS solutions: the big, the complex, the gnarly… the monsters of your data centre. And they inevitably end up in Infrastructure-as-a-Service… or stuck on-prem. For customers choosing the IaaS route (the “optimization path” in cloud-speak), the cloud provider manages the infrastructure but the customer is still responsible for the database and operating system.

Obviously, IaaS has a higher management overhead than PaaS, but the journey to IaaS is often simpler (essentially a lift-and-shift approach), while PaaS solutions often require a more complex migration. This is especially true with cloud providers whose recommended PaaS solution is actually a different database product entirely: for example, Google Cloud and Microsoft Azure will recommend that Oracle customers move to Cloud SQL and Managed PostgreSQL respectively.

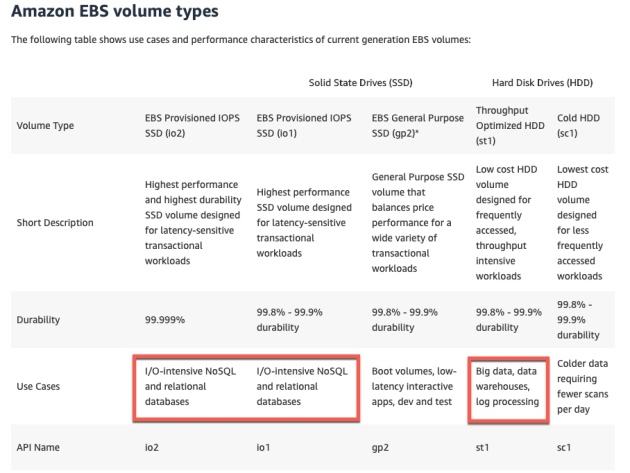

I/O Performance Is The Biggest Challenge

My view is that PaaS solutions are the best path for all appropriate workloads, but there will always be some outliers which need to move to IaaS. Almost by definition, those are the most high-profile, demanding, expensive, revenue-affecting… in fact… the most interesting workloads. And in all the cases I’ve seen*, I/O performance has been the limiting factor.

It’s relatively easy to get a lot of compute power in the cloud. But as soon as you start ramping up the amount of data you need to read and write, or demanding that those reads and writes have very fast, predictable response times, you hit problems. In other words, if latency, IOPS or throughput are your metrics of choice, you’d better be ready to start doing unnatural things.
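To see why those three metrics pull against each other, here is a minimal back-of-the-envelope sketch. It assumes only two well-known relationships: throughput is IOPS multiplied by I/O size, and (via Little's law) the IOPS a workload can sustain is bounded by the number of outstanding I/Os divided by the latency of each one. The function names and all of the figures in the usage lines are hypothetical, chosen purely for illustration; they are not measurements from any cloud provider.

```python
def throughput_mib_s(iops: float, block_size_kib: float) -> float:
    """Throughput (MiB/s) implied by an IOPS rate at a given I/O size (KiB)."""
    return iops * block_size_kib / 1024


def max_iops(queue_depth: int, latency_ms: float) -> float:
    """Little's law: outstanding I/Os = IOPS x latency, so IOPS = depth / latency.

    latency_ms is the average per-I/O response time in milliseconds.
    """
    return queue_depth * 1000 / latency_ms


# Hypothetical figures, for illustration only.
small_block = throughput_mib_s(iops=20_000, block_size_kib=8)   # OLTP-style 8 KiB reads
fast_disk = max_iops(queue_depth=32, latency_ms=1.0)            # 1 ms average latency
slow_disk = max_iops(queue_depth=32, latency_ms=2.0)            # latency doubles

print(f"{small_block:.2f} MiB/s at 20,000 x 8 KiB IOPS")
print(f"{fast_disk:.0f} IOPS ceiling at 1 ms, {slow_disk:.0f} at 2 ms")
```

The point of the sketch is simply that, at a fixed queue depth, doubling the latency halves the IOPS ceiling, which is why predictable response times matter as much as the headline numbers.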

And it’s not necessarily the case that your required level of performance cannot be achieved. Often, it’s more correct to say that your required levels of performance cannot be achieved at an acceptable cost. Because it turns out that the following statement is just as true in the cloud as it ever was on-prem:

Performance and Cost are two sides of the same coin…

This is why I believe that the biggest gap in the cloud providers’ product portfolios today is in the area of high performance relational databases: primarily Oracle Database and Microsoft SQL Server. The PaaS solutions are designed for the average workloads, not the high-end. A complex database running on, for example, Oracle Exadata will struggle to run on a vanilla IaaS deployment – while the refactoring required to take that database and migrate it to Managed PostgreSQL is almost unimaginable.

And so, now, we have finally come to the point: the Silk Platform. I have spent the last few years at Silk bringing to market a solution specifically for this problem. Over the next few posts, I’m going to explain what Silk is and how we built our solution to deliver very high performance for databases in the cloud.

But you won’t have to rely on just my word for it. I have backup 🙂

* I work primarily with customers of Microsoft Azure and Google Cloud

One outcome of this “unique” position was that many DBAs had to learn skills outside of their core profession (networking, Linux or Windows admin skills, SQL tuning, PL/SQL decoding, hostage negotiation etc). I’d love to say this thirst for knowledge was due to professional pride, but the best DBAs I ever met simply learned these skills so they could prove they weren’t in the wrong and thus get an easier life. “Oh you think your SQL runs slow because of my database huh? Well if you rewrote it like this, it runs in 10% of the time and doesn’t make all the lights go dim in the data centre, you imbecile…”