One consequence of my job is that I spend a lot of time looking at Oracle Automatic Workload Repository reports, specifically at information about I/O. I really do mean a lot of time (honestly, I’m not kidding, I have had dreams about AWR reports). One thing that comes up very frequently is the confusion relating to how the measurements of IOPS and throughput are displayed in the AWR report Load Profile section. The answer, is that they aren’t. Well, not exactly… let me explain.

Physical Read and Write I/O

Right at the top of an AWR report, just after the Database and Host details, you’ll find the familiar Load Profile section. Until recently, it had changed very little through the releases of Oracle since its introduction in 10g. Here’s a sample from 11g Release 2:

Load Profile Per Second Per Transaction Per Exec Per Call

~~~~~~~~~~~~ --------------- --------------- ---------- ----------

DB Time(s): 44.1 0.4 0.07 1.56

DB CPU(s): 1.6 0.0 0.00 0.06

Redo size: 154,034,644.3 1,544,561.0

Logical read: 154,436.1 1,548.6

Block changes: 82,491.9 827.2

Physical reads: 150.6 1.5

Physical writes: 18,135.2 181.9

User calls: 28.3 0.3

Parses: 142.7 1.4

Hard parses: 7.5 0.1

W/A MB processed: 2.1 0.0

Logons: 0.1 0.0

Executes: 607.7 6.1

Rollbacks: 0.0 0.0

Transactions: 99.7

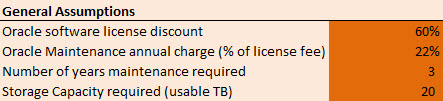

In my role I have to look at the amount of I/O being driven by a database, so I can size a solution based on flash memory. This means knowing two specific metrics: the number of I/Os per second (IOPS) and the throughput (typically measured in MB/sec). I need to know these values for both read and write I/O so that I can understand the ratio. I also want to understand things like the amount of random versus sequential I/O, but that’s beyond the scope of this post.

The first thing to understand is that none of this information is shown above. There are values for Physical reads and Physical writes but these are actually measured in database blocks. Even if we knew the block size (which we don’t because Oracle databases can have multiple block sizes) we do not know how many I/Os were required. Ten Oracle blocks could be written in one sequential I/O or ten individual “random” I/Os, completely changing the IOPS measurement. To find any of this information we have to descend into the depths of the AWR report to find the Instance Activity Stats section.

In Oracle 12c, the format of the AWR report changed, especially the AWR Load Profile section, which was modified to show the units that each measurement uses. It also includes some new lines such as Read/Write IO Requests and Read/Write IO. Here’s a sample from a 12c database (taken during a 30 second run of SLOB):

Load Profile Per Second Per Transaction Per Exec Per Call

~~~~~~~~~~~~~~~ --------------- --------------- --------- ---------

DB Time(s): 44.1 0.4 0.07 1.56

DB CPU(s): 1.6 0.0 0.00 0.06

Redo size (bytes): 154,034,644.3 1,544,561.0

Logical read (blocks): 154,436.1 1,548.6

Block changes: 82,491.9 827.2

Physical read (blocks): 150.6 1.5

Physical write (blocks): 18,135.2 181.9

Read IO requests: 150.3 1.5

Write IO requests: 15,096.9 151.4

Read IO (MB): 1.2 0.0

Write IO (MB): 141.7 1.4

User calls: 28.3 0.3

Parses (SQL): 142.7 1.4

Hard parses (SQL): 7.5 0.1

SQL Work Area (MB): 2.1 0.0

Logons: 0.1 0.0

Executes (SQL): 607.7 6.1

Rollbacks: 0.0 0.0

Transactions: 99.7

Now, you might be forgiven for thinking that the values highlighted in red and blue above tell me the very IOPS and throughput information I need. If this were the case, we could say that this system performed 150 physical read IOPS and 15k write IOPS, with throughput of 1.2 MB/sec reads and 141.7 MB/sec writes. Right?

But that isn’t the case – and to understand why, we need to page down five thousand times through the increasingly-verbose AWR report until we eventually find the Other Instance Activity Stats section (or just Instance Activity Stats in pre-12c reports) and see this information (edited for brevity):

Other Instance Activity Stats DB/Inst: ORCL/orcl Snaps: 7-8

-> Ordered by statistic name

Statistic Total per Second per Trans

-------------------------------- ------------------ -------------- -------------

physical read IO requests 5,123 150.3 1.5

physical read bytes 42,049,536 1,233,739.3 12,371.2

physical read total IO requests 37,162 1,090.3 10.9

physical read total bytes 23,001,900,544 674,878,987.9 6,767,255.2

physical read total multi block 21,741 637.9 6.4

....

physical write IO requests 514,547 15,096.9 151.4

physical write bytes 5,063,483,392 148,563,312.9 1,489,698.0

physical write total IO requests 537,251 15,763.0 158.1

physical write total bytes 18,251,309,056 535,495,967.4 5,369,611.4

physical write total multi block 18,152 532.6 5.3

The numbers in red and blue match up with those above, albeit with the throughput values using different units of bytes/sec instead of MB/sec. But the problem is, these aren’t the “total” values – which are highlighted in green. So what are those total values showing us?

Over to the Oracle Database 12c Reference Guide:

physical read IO requests: Number of read requests for application activity (mainly buffer cache and direct load operation) which read one or more database blocks per request. This is a subset of “physical read total IO requests” statistic.

physical read total IO requests: Number of read requests which read one or more database blocks for all instance activity including application, backup and recovery, and other utilities. The difference between this value and “physical read total multi block requests” gives the total number of single block read requests.

The values that don’t have the word total in them, i.e. the values shown in the AWR Profile section at the start of a report, are only showing what Oracle describes as “application activity“. That’s all very well, but it’s meaningless if you want to know how much your database is driving your storage. This is why the values with total in the name are the ones you should consider. And in the case of my sample report above, there is a massive discrepancy between the two: for example, the read throughput value for application activity is just 1.2 MB/sec while the total value is actually 644 MB/sec – over 500x higher! That extra non-application activity is definitely worth knowing about. (In fact, I was running a highly parallelised RMAN backup during the test just to make the point…)

[Note: There was another section here detailing how to find and include the I/O generated by redo into the totals, but after consultation with guru and legend Tanel Poder it’s come to my attention that this is incorrect. In fact, reads and writes to redo logs are included in the physical read/write total statistics…]

Oracle 12c IO Profile Section

Luckily, Oracle 12c now has a new section which presents all the information in one table. Here’s a sample extracted from the same report as above:

IO Profile Read+Write/Second Read/Second Write/Second

~~~~~~~~~~ ----------------- --------------- ---------------

Total Requests: 16,853.4 1,090.3 15,763.0

Database Requests: 15,247.2 150.3 15,096.9

Optimized Requests: 0.1 0.0 0.0

Redo Requests: 517.5 1.2 516.3

Total (MB): 1,154.3 643.6 510.7

Database (MB): 142.9 1.2 141.7

Optimized Total (MB): 0.0 0.0 0.0

Redo (MB): 295.7 0.0 295.7

Database (blocks): 18,285.8 150.6 18,135.2

Via Buffer Cache (blocks): 18,282.1 150.0 18,132.0

Direct (blocks): 3.7 0.6 3.1

Suddenly life is more simple. You want to know the total IOPS and throughput? It’s all in one place. You want to calculate the ratio of reads to writes? Just compare the read and write columns. Happy days.

One word of warning though: there are other database processes driving I/O which may not be tracked in these statistics. I see no evidence for control file reads and writes being shown, although these are insignificant in magnitude. More significant would be I/O from the archiver process for databases running in archive log mode, as each redo log must be sequentially read and re-written out as an archive log. Are these included? Yet another possibility would be the Recovery Writer (RVWR) process which is responsible for writing flashback logs when database flashback logging is enabled. [Discussions with Jonathan Lewis suggest these stats are all included – and let’s face it, he wrote the book on the subject…!] It all adds up… Oracle really needs to provide better clarity on what these statistics are measuring.

Conclusion

If you want to know how much I/O is being driven by your database, do not use the information in the Load Profile section of an AWR report. Use the I/O Profile section if available, or otherwise skip to the Instance Activity Stats section and look at the total values for physical reads and writes (and redo). Everything else is just lies, damned lies and (I/O) statistics.