All Flash Arrays: Active/Active versus Active/Passive

October 19, 2016

I want you to imagine that you are about to run a race. You have your trainers on, your pre-race warm-up is complete and you are at the start line. You look to your right… and see that the guy next to you, the one with the bright orange trainers, is hopping up and down on one leg. He does have two legs – the other one is held up in the air – he’s just choosing to hop the whole race on one foot. Why?

You can’t think of a valid reason so you call across, “Hey buddy… why are you running on one leg?”

His reply blows your mind: “Because I want to be sure that, if one of my legs falls off, I can still run at the same speed”.

Welcome, my friends, to the insane world of storage marketing.

High Availability Clusters

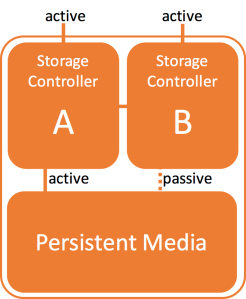

The principles of high availability are fairly standard, whether you are discussing enterprise storage, databases or any other form of HA. The basic premise is that, to maintain service in the event of unexpected component failures, you need to have at least two of everything. In the case of storage array HA, we are usually talking about the storage controllers, which are the interfaces between the outside world and the persistent media on which data resides.

OK, so let’s start at the beginning: if you only have one controller then you are running at risk, because a controller failure equals a service outage. No enterprise-class storage array would be built in this manner. So clearly you are going to want a minimum of two controllers… which happens to be the most common configuration you’ll find.

So now that we have two controllers, let’s call them A and B. Each controller has CPU, memory and so on, which allow it to deliver a certain level of performance – so let’s give that an arbitrary value: one controller can deliver 1P of performance. And finally, let’s remember that those controllers cost money – so let’s say that a controller capable of giving 1P of performance costs five groats.

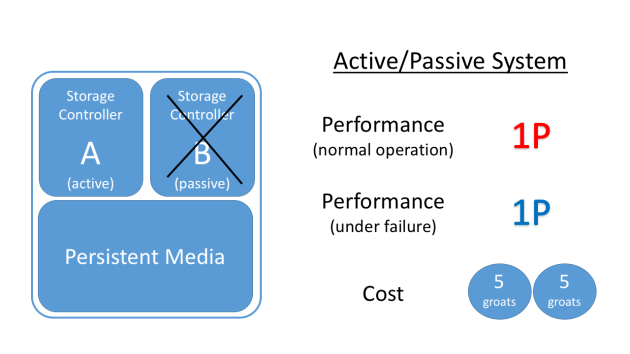

Active/Passive Design

In a basic active/passive design, one controller (A) handles all traffic while the other (B) simply sits there waiting for its moment of glory. That moment comes when A suffers some kind of failure – and B then leaps into action, immediately replacing A by providing the same service. There might be a minor delay as the system performs a failover, but with multipathing software in place it will usually be quick enough to go unnoticed.

So what are the downsides of active/passive? There are a few, but the most obvious one is that you are architecturally limited to seeing only 50% of your total available performance. You bought two controllers (costing you ten groats!) which means you have 2P of performance in your pocket, but you will forever be limited to a maximum of 1P of performance under this design.

Active/Active Design

In an active/active architecture, both controllers (A and B) are available to handle traffic. This means that under normal operation you now have 2P of performance – and all for the same price of ten groats. Both the overall performance and the price/performance have doubled.

What about in a failure situation? Well, if controller A fails you still have controller B functioning, which means you are now down to 1P of performance. It’s now half the performance you are used to in this architecture, but remember that 1P is still the same performance as the active/passive model. Yes, that’s right… the performance under failure is identical for both designs.
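The arithmetic of the two designs can be checked with a small toy model. This sketch assumes, as the post does, identical controllers rated at an arbitrary 1P and costing five groats each; the `Design` class and its method names are hypothetical illustrations, not anything a vendor publishes.

```python
from dataclasses import dataclass

# Toy model in the post's arbitrary units: P for performance, groats for cost.
CONTROLLER_PERF = 1.0  # each controller delivers 1P (assumption from the post)
CONTROLLER_COST = 5    # each controller costs five groats (assumption from the post)


@dataclass
class Design:
    name: str
    controllers: int
    active_in_normal: int  # controllers serving I/O when nothing has failed

    def perf_normal(self) -> float:
        return self.active_in_normal * CONTROLLER_PERF

    def perf_after_one_failure(self) -> float:
        # After one failure at most (controllers - 1) remain, and a design
        # never has more controllers active than it did before the failure.
        survivors = min(self.active_in_normal, self.controllers - 1)
        return survivors * CONTROLLER_PERF

    def cost(self) -> int:
        return self.controllers * CONTROLLER_COST

    def groats_per_p(self) -> float:
        # Price/performance measured under normal operation.
        return self.cost() / self.perf_normal()


active_passive = Design("active/passive", controllers=2, active_in_normal=1)
active_active = Design("active/active", controllers=2, active_in_normal=2)

for d in (active_passive, active_active):
    print(f"{d.name}: normal={d.perf_normal()}P, "
          f"after failure={d.perf_after_one_failure()}P, "
          f"cost={d.cost()} groats, {d.groats_per_p()} groats per P")
# active/passive: normal=1.0P, after failure=1.0P, cost=10 groats, 10.0 groats per P
# active/active: normal=2.0P, after failure=1.0P, cost=10 groats, 5.0 groats per P
```

Both designs land on 1P after a failure; the only difference is what you get, and what each P costs, the rest of the time.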

What About The Cost?

Smart people look at technical criteria and choose the design which best fits their requirements. But really smart people (like my buddy Shai Maskit) remember that commercial criteria matter too. So with that in mind, let’s go back and consider those prices a little more. For ten groats, the active/active solution delivered performance of 2P under normal operation. The active/passive solution only delivered 1P. What happens if we attempt to build an active/passive system with 2P of performance?

To build an active/passive solution which delivers 2P of performance we now need to use bigger, more powerful controllers. Architecturally that’s not much of a challenge – after all, most modern storage controllers are just x86 servers and there are almost always larger models available. The problem comes with the cost. To paraphrase Shai’s blog on this same subject:

Cost of storage controller capable of 1P performance < Cost of storage controller capable of 2P performance

In other words, building an active/passive system requires more expensive hardware than building a comparable active/active system. It might not be double, as in my picture, but it will sure as hell be more expensive – and that cost is going to be passed on to the end user.
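To make the cost argument concrete, here is a sketch that prices a dual-controller array delivering a target performance in normal operation. The `controller_cost` price list is a hypothetical assumption: five groats for a 1P controller comes from the post, while ten groats for a 2P controller is just an illustrative figure. The post only claims the 2P controller costs more, and any price above five groats gives the same ordering.

```python
# Hypothetical price list in groats, keyed by controller performance in P.
# 5 groats for 1P is from the post; 10 groats for 2P is an assumption.
controller_cost = {1: 5, 2: 10}


def two_controller_cost(target_perf: int, active_active: bool) -> int:
    """Cost of a dual-controller array delivering target_perf in normal operation."""
    if active_active:
        if target_perf % 2:
            raise ValueError("this toy model only prices whole-P controllers")
        # Both controllers serve I/O, so each only needs half the target.
        per_controller = target_perf // 2
    else:
        # Only one controller serves I/O, so it must deliver the whole target,
        # and the standby must be just as big to take over after a failure.
        per_controller = target_perf
    return 2 * controller_cost[per_controller]


print(two_controller_cost(2, active_active=True))   # 10
print(two_controller_cost(2, active_active=False))  # 20
```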

Does It Scale?

Another question that really smart people ask is, “How does it scale?”. So let’s think about what happens when you want to add more performance.

In an active/active design you have the option of adding more performance by adding more controllers. As long as your architecture supports the ability for all controllers to be active concurrently, adding performance is as simple as adding nodes into a cluster.

But what happens when you add a node to an active/passive solution? Nothing. You are architecturally limited to the performance of one controller. Adding more controllers just makes the price/performance even worse. This means that the only solution for adding performance to an active/passive system is to replace the controllers with more powerful versions…
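The scaling argument can be sketched the same way. This assumes every node is an identical 1P, five-groat controller and that the active/active cluster really can keep all nodes busy concurrently (the "as long as your architecture supports" caveat above); `scale_out` is a hypothetical helper name.

```python
CONTROLLER_PERF = 1  # P per controller (the post's arbitrary unit)
CONTROLLER_COST = 5  # groats per controller (the post's arbitrary price)


def scale_out(nodes: int, active_active: bool) -> tuple[int, float]:
    """Return (normal-operation performance in P, groats per P) for a cluster."""
    # Active/active: every node serves I/O. Active/passive: only one ever does.
    active = nodes if active_active else 1
    perf = active * CONTROLLER_PERF
    return perf, nodes * CONTROLLER_COST / perf


for n in (2, 3, 4):
    print(f"{n} nodes: active/active {scale_out(n, True)}, "
          f"active/passive {scale_out(n, False)}")
# 2 nodes: active/active (2, 5.0), active/passive (1, 10.0)
# 3 nodes: active/active (3, 5.0), active/passive (1, 15.0)
# 4 nodes: active/active (4, 5.0), active/passive (1, 20.0)
```

Active/active holds price/performance flat as nodes are added, while every extra active/passive node only raises the cost per P.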

The Pure Storage Architecture

Pure Storage is an All Flash Array vendor who knows how to play the marketing game better than most, so let’s have a look at their architecture. The PS All Flash Array is a dual-controller design where both controllers send and receive I/Os to and from the hosts. But… only one controller processes I/Os to and from the underlying persistent media (the SSDs). So what should we call this design, active/active or active/passive?

According to an IDC white paper published on PS’s website, PS controllers are sized so that each controller can deliver 100% of the published performance of the array. The paper goes on to explain that under normal operation each controller is loaded to a maximum of 50% on the host side. This way, PS promises that performance under failure will be equal to the performance under normal operations.

In other words, as an architectural decision, the sum of the performance of both controllers can never be delivered.
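The sizing rule described in the IDC paper reduces to simple arithmetic. This sketch assumes each controller has a capacity `C` in arbitrary units, that host-side load per controller is capped at 50% in normal operation (as the paper is described above) and that a surviving controller may run at 100%; the function and variable names are illustrative, not Pure’s.

```python
def deliverable(capacity: float, controllers: int, cap: float) -> float:
    """Maximum load the array accepts if each controller runs at most at `cap`."""
    return controllers * capacity * cap


C = 100.0  # capacity of one controller, arbitrary units (assumption)

normal = deliverable(C, controllers=2, cap=0.5)  # both controllers, each capped at 50%
failed = deliverable(C, controllers=1, cap=1.0)  # the survivor runs flat out
installed = 2 * C                                # what both controllers could do together

print(normal, failed, installed - normal)  # 100.0 100.0 100.0
```

Normal and failure performance are equal by construction, and exactly one controller’s worth of installed capacity is never delivered.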

So which of the above designs does that sound like to you? It sounds like active/passive to me, but of course that’s not going to help PS sell its flash arrays. Unsurprisingly, on the PS website the product is described as “active/active” at every opportunity.

Yet even PS’s chief talking head, Vaughn Stewart, has to ask the question, “Is the FlashArray an Active/Active or Active/Passive Architecture?” and eventually comes to the conclusion that, “Active/Active or Active/Passive may be debatable”.

There’s no debate in my view.

Conclusion

You will obviously draw your own conclusions on everything I’ve discussed above. I don’t usually pick on other AFA vendors during these posts because I’m aiming for an educational tone rather than trying to fling FUD. But I’ll be honest, it pisses me off when vendors appear to misuse technical jargon in a way which conveniently masks their less-glamorous architectural decisions.

My advice is simple. Always take your time to really look into each claim and then frame it in your own language. It’s only then that you’ll really start to understand whether something you read about is an innovative piece of design from someone like PS… or more likely just another load of marketing BS.

* Many thanks to my colleague Rob Li for the excellent running-on-one-leg metaphor

Thanks for this post.

Hi, Dimitris from Nimble Storage here.

The logical fallacy this article is based on is that all controllers are the same speed.

That’s not even remotely close to the truth. Each vendor has a very different architecture so often the active/standby of one vendor might be as fast as 2 controllers from another vendor working at 100%.

The real problem with active/active is that it can lead to unacceptable performance in the event of a failure.

If I’m used to my application running at 1ms and after failover the latency is several times higher (you’re delusional if you think it merely doubles; A/A systems that are maxed out don’t work like that), then that’s not always an acceptable business outcome.

The safe and desirable business outcome is for performance to be the same no matter what’s happening.

The only exception is if the solution has a way to prioritize the critical workloads in the event of a failover, so they get exactly the same performance as before.

Thx

D

Thanks Dimitris, that’s very interesting. I’ve never really bothered looking into Nimble’s architecture so I confess I wasn’t aware that you used an Active/Passive approach. I presume that since Nimble’s architecture was designed for a hybrid (disk+flash) approach there would have been no need to go the extra mile and implement a high performance Active/Active solution.

Also check this:

http://recoverymonkey.org/2016/08/22/the-importance-of-automated-headroom-management/

It is not as if an application or business will become spoilt by being supplied with high performance most of the time (99% of the time) and reduced performance at other times. What matters is making sure that the reduced performance doesn’t cause the application to time out. If the application is supplied with high performance during normal operation, that is good for business, and 99.9% of the time the system should be running in normal mode.

Hi,

I am not from a storage background, but I am a user. Firstly, I haven’t come across a system that has more than 2 controllers. Is it because I play at the lower end of the storage spectrum, or are there actually systems with more than 2 controllers? Also, for this to be valid, all the controllers should be capable of sharing the same disks, either concurrently in the active/active case or after some form of failover in the active/passive case.

My second query is how you characterize arrays that are “dual active”, i.e. a single volume can be mastered by either of the two controllers but not both simultaneously. Is this indistinguishable from a true active/active design that allows both controllers to simultaneously service a single volume? Are there any arrays that actually support this kind of architecture? I assume this would increase lock traffic between the two controllers.

> I am not from a storage background but I am a user.

We are all users of storage in some way 🙂

> Firstly, I haven’t come across a system that has more than 2 controllers. Is it because I play at the lower end of the storage spectrum, or are there actually systems with more than 2 controllers?

There are many storage platforms which have more than two active controllers. Kaminario, for example, or EMC VMAX. In fact, pretty much all of the high-end legacy disk arrays were built as multi-controller architectures. High-end enterprise storage tends to demand it.

> Also, for this to be valid, all the controllers should be capable of sharing the same disks, either concurrently in the active/active case or after some form of failover in the active/passive case.

Not necessarily, since data is likely to be mirrored – therefore each controller only needs to have access to one mirror copy. Or another common option is to have pairs of controllers looking after pools of storage.

> My second query is how you characterize arrays that are “dual active”, i.e. a single volume can be mastered by either of the two controllers but not both simultaneously. Is this indistinguishable from a true active/active design that allows both controllers to simultaneously service a single volume? Are there any arrays that actually support this kind of architecture? I assume this would increase lock traffic between the two controllers.

I think you might be talking about ALUA (Asymmetric Logical Unit Access) as used by, for example, Violin Memory 7000 series arrays or many storage arrays architected for disk (e.g. EMC VNX). You can read more about that here:

http://kaminario.com/company/blog/active-active-vs-active-passive-storage-controllers/

The HPE 3PAR 8xxx is also sold as having active/active controllers. They actually are active/active, but the controllers cannot both write to the same LUN: both can read, but only one controller can write to the LUN(s). Every time we look closer at a solution, the controllers aren’t really active/active (Pure, HPE …)

I don’t know of any 100% active/active controllers (full flash) on the market right now which are as affordable as the solutions proposed by Pure, HPE or Nimbus…

The Kaminario K2 is fully active/active across all controllers, as indeed is the EMC XtremIO All Flash Array (to my knowledge). As for affordability, it is totally inaccurate to think that active/passive solutions are more affordable than active/active, since the price is decided by a multitude of much more complex factors.
