The Final Post: Hardware Is Dead

June 27, 2019

Well, my friends, this is it. The time has come to retire the flashdba jersey after more than seven years of fun and frolics. In part one of this post, I looked back at my time in the All-Flash storage industry and marvelled at the crazy, Game of Thrones-style chaos that saw so many companies arrive, fight, merge, split up and burn out. Throughout that time, I wrote articles on this blog site which attempted to explain the technical aspects of All-Flash as the industry went from niche to mainstream. Like many technical bloggers, I found this writing process enjoyable and fulfilling, because it helped me put some order to my own thoughts on the subject. But back in 2017, something changed and my blogs became less and less frequent… eventually leading here. I’ll explain why in a minute, but first we need to talk about the title of this post.

Hardware Is Meh

Back in my Oracle days, I worked with a product called Exadata – a converged database appliance which Oracle marketed as “hardware and software engineered to work together”. For a time, Oracle’s “Engineered Systems” were the future of the company and, therefore, the epicentre of their marketing campaigns. Today? It’s all about the Oracle Cloud. And this is actually a perfect representation of the I.T. industry as a whole… because, here in 2019, nobody wants to talk about hardware anymore. Whether it’s hyper-converged systems, All-Flash storage, “Engineered” database appliances or basic server and networking infrastructure, hardware is just not cool anymore.

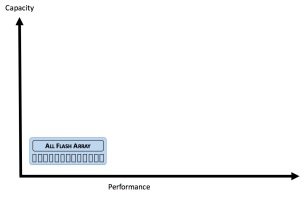

For a long time, companies have purchased hardware systems as a capital expense, the cost then being written off over a number of years, at which point the dreaded hardware refresh is required. Choosing the correct hardware specifications (capacity, performance, number of ports etc.) has always been extremely challenging because business is unpredictable: buy too small and you will need to upgrade at some point down the line, which could be expensive; buy too big and you are overpaying for resources you may never use. And if you are a small company or a startup, those capital expenses can be very hard to fund while you wait for revenue to build.

Today, nobody needs to do this anymore. The cloud – and in particular the public cloud – allows companies to consume exactly what they need, just when they need it – and fund it as an operating expense, with complete flexibility. One of the great joys of the public cloud is that hardware has been commoditised and abstracted to such a degree that you just don’t need to care about it anymore. Serverless, you might say… (IT has always been fond of a ridiculous buzzword.)

The Vendor View: AWS Is The New Enemy

For infrastructure vendors, the industry has reached a new tipping point. A few years ago, if you worked in sales for a storage startup (like me), you found business by targeting EMC customers who were unhappy with the prices they were paying / service they were getting / quality of steaks being bought for them by their EMC rep. Ditto, to a lesser extent, with HP and IBM, but EMC was the big gorilla of the marketplace. Today, everybody in storage has a new number one enemy: Amazon Web Services, with Microsoft Azure and Google Cloud Platform making up the top three. But make no mistake, AWS is eating everybody’s lunch – and the biggest challenge for the rest is that, in many customers’ eyes, the public cloud is Amazon Web Services. (EMC, meanwhile, doesn’t even exist anymore but is instead a part of Dell… that would have been impossible to imagine five years ago.)

Cloud ≠ Public Cloud

However, nobody (sane) is predicting that 100% of workloads will end up in the public cloud (and let’s be honest now, when we say “public cloud” we basically mean AWS, Azure and GCP). For some companies – where I.T. is not their core business – it makes perfect sense to do everything in the cloud. But for others, various reasons relating to control, risk, performance, security and regulation will mean that at least some data remains on premises, in private or hybrid clouds. You can argue among yourselves about how much.

So, for those people who still require their own infrastructure, what now? Once you’ve seen how easy it is to use the public cloud, sampled all the rich functionality of AWS and fallen into the trap of having staff paying for AWS instances on their credit cards (so-called “Shadow IT”), how do you go back to the old days of five-year up-front capital investments into large boxes of tin which sit in the corner of your data centre and remain stubbornly inflexible?

Consumption-Based Infrastructure

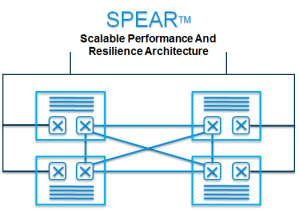

OK, let’s get to the conclusion. A couple of years ago, Kaminario (my employer) decided to exit the hardware business and become a software company. Like most (almost all) All-Flash storage vendors, Kaminario uses commodity whitebox components (basically, Intel x86 servers and enterprise-class SSDs) for the hardware chassis and then runs its own software on top to turn them into high-performance, highly-resilient and feature-rich storage platforms. Everybody does it: DellEMC’s XtremIO, Pure Storage, Kaminario, HP Nimble, NetApp… all of the differentiation in the AFA business is in software. So why purchase hardware components, manufacture and integrate them, keep them in inventory and then pass on all that extra cost to customers when your core business is actually software?

Kaminario decided to take a new route by disaggregating the hardware from the software and then handing over the hardware part to someone who already sells millions of hardware units all around the globe. Now, when you buy a Kaminario storage array, you get exactly the same physical appliance, but you (or your reseller) actually buy the hardware from Tech Data at commodity component cost. You then buy a consumption-based license to use the software from Kaminario based on the number of terabytes of data stored. This can be on a monthly Pay As You Go model or via a pre-paid subscription for a number of years. In a real sense, it is the cloud consumption model for people who require on-prem infrastructure.

There are all sorts of benefits to this (most customers never fill their storage arrays above 80% capacity, so why always pay for 100%?), but I’m not going to delve into them here because this is not a sales pitch, it’s an explanation for what I did next.

What I Did Next

Seeing as Kaminario decided to make a momentous shift, I thought it was a good time to make one of my own. So, two years ago, I took the decision to leave the world of technical presales and become a software sales executive. As in, a quota-carrying, non-technical, commercial sales guy with targets to hit and commission to earn. Presales people also earn commission, but are far more protected from the “lumpy” highs and lows that come with complex and lengthy high-value sales cycles (what sales people call “big ticket sales”). In commercial sales, the highs are higher and the lows are lower – and the risks are definitely riskier. Since my new role coincided with the company going through an entire change of business model, the risk was pretty hard to quantify, but I’m pleased to say that 2018 was the company’s best ever year, not just globally but also in the territory that I now manage (the United Kingdom).

More importantly for me, I’m now two years into this new journey and I have zero regrets about the decision to leave my technical past behind. I’ve learnt more than ever before (often the hard way) and I’ve experienced all the highs and lows one might expect, but I still get the same excitement from this role that I used to get in the early days of my technical career.

So, the time is right to hang up the technical jersey and bid flashdba farewell. It’s been fun and I want to say thank you to everybody who read, commented, agreed or disagreed with my content. There are almost 200 posts and pages on this site which I will leave here in the hope that they remain useful to others – and as a sort of virtual monument to my former career.

In the meantime, I’ve got to go now, because there are meetings to be had, customers to be entertained, dinners to be expensed and (hopefully) deals to be closed. Farewell, my friends, stay in touch… and remember, if you need to buy something… call me, yeah?

— flashdba —

[September 2020 Spoiler Alert: I couldn’t stay away]

The automotive industry seems like a good example here. After over a century of using internal combustion engines, we are now at the point where electric vehicles are a