Have you seen the press recently? Or passed through an airport and seen the massive billboards advertising IT companies? I have – and I’ve learnt something from them: Engineered Systems are the best thing ever. I also know this because I read it on the Oracle website… and on the IBM website, although IBM likes to call them different names like “Workload Optimized Systems”. HP has its Converged Infrastructure, which is what Engineered Systems look like if you don’t make software. And even Microsoft, that notoriously hardware-free zone where software exists in a utopia unconstrained by nuts and bolts, has a SQL Server Appliance solution which it built with HP.

[I’m going to argue about this for a while, because that’s what I do. There is a summary section further down if you are pressed for time]

So clearly Engineered Systems are the future. Why? Well let’s have a look at the benefits:

Pre-Integration

It doesn’t make sense to buy all of the components of a solution and then integrate them yourself, stumbling across all sorts of issues and compatibility problems, when you can buy the complete solution from a single vendor. Integrating the solution yourself is the best of breed approach, something which seems to have fallen out of favour with marketing people in the IT industry. The Engineered Systems solution is pre-integrated, i.e. it’s already been assembled, tested and validated. It works. Other customers are using it. There is safety in the herd.

Optimization

In Oracle Marketing’s parlance, “Hardware and software, engineered to work together”. If the same vendor makes everything in the stack then there are more opportunities to optimize the design, the code, the integration… assumptions no longer need to be made, so the best possible performance can be squeezed out of the complete package.

Faster Deployment

Well… it’s already been built, right? See the Pre-Integration section above and think about all that time saved: you just need to wheel it in, connect up the power and turn it on. Simples.

Of course this isn’t completely the case if you also have to change the way your entire support organisation works in order to support the incoming technology, perhaps by retraining whole groups of operations staff and creating an entirely new specialised role to manage your new purchase. In fact, you could argue that the initial adoption of a technology like Exadata is so disruptive that it is much more complicated and resource-draining than building those best of breed solutions your teams have been integrating for decades. But once you’ve retrained all your staff, changed all your procedures, amended your security guidelines (so the Database Machine Administrator has access to all areas) and fended off the poachers (DBMAs get paid more than DBAs) you are undoubtedly in the perfect position to start benefiting from that faster deployment. Well done you.

And then there’s the migration from your existing platform, where (to continue with Exadata as an example) you have to upgrade your database to 11.2, migrate to Linux, convert to ASM, potentially change the endianness of your data and perhaps strip out some application hints in order to take advantage of features like Smart Scan. That work will probably take many times longer than the time saved by the pre-integration…

Single-Vendor Benefits

The great thing about having one vendor is that it simplifies the procurement process and makes support easier too – the infamous “One Throat To Choke” cliché.

Marketing Overdrive

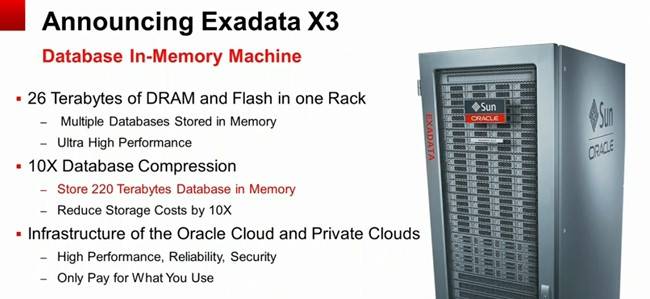

If you believe the hype, the engineered system is the future of I.T. and anyone foolish enough to ignore this “new” concept is going to be left behind. So many of the vendors are pushing hard on that message, but of course there is one particular company with an ultra-aggressive marketing department who stands out above the rest: the one that bet the farm on the idea. Let’s have a look at an example of their marketing material:

Video hosted by YouTube under Standard Terms of Service. Content owner: Oracle Corporation

Now this is all very well, but I have an issue with Engineered Systems in general and this video in particular. Oracle says that if you want a car you do not go and buy all the different parts from multiple, disparate vendors and then set about putting them together yourself. Leaving aside the fact that some brave / crazy people do just that, let’s take a second to consider this. It’s certainly true that most people do not buy their cars in part form and then integrate them, but there is an important difference between cars and the components of Oracle’s Engineered Systems range: variety.

If we pick a typical motor vehicle manufacturer such as Ford or BMW, how many ranges of vehicle do they sell? Compact, family, sports, SUV, luxury, van, truck… then in each range there are many models, each model comes in many variants with a huge list of options that can be added or taken away. Why is there such a massive variety in the car industry? Because choice and flexibility are key – people have different requirements and will choose the product most suitable to their needs.

Looking at Oracle’s engineered systems range, there are six appliances – of which three are designed to run databases: the Exadata Database Machine, the SuperCluster and the ODA. So let’s consider Exadata: it comes in two variants, the X3-2 and the X3-8. The storage for both is identical: a full rack contains 14x Exadata storage servers each with a standard configuration of CPUs, memory, flash cards and hard disk drives. You can choose between high performance or high capacity disk drives but everything else is static (and the choice of disk type affects the whole rack, not just the individual server). What else can you change? Not a lot really – you can upgrade the DRAM in the database servers and choose between Linux or Solaris, but other than that the only option is the size of the rack.

The Exadata X3-2 comes in four possible rack sizes: eighth, quarter, half and full; the X3-8 comes only as a full rack. These rack sizes take into account both the database servers and the storage servers, meaning the balance of storage to compute power is fixed. This is a critical point to understand, because this ratio of compute to storage will vary for each different real-world database. Not only that, but it will vary through time as data volumes grow and usage patterns change. In fact, it might even vary through temporal changes such as holiday periods, weekends or simply just the end of the day when users log off and batch jobs kick in.
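To make the fixed-ratio point a bit more concrete, here is a minimal sketch in Python. The four rack names come from the paragraph above, but the compute and storage "units" attached to each one are numbers I have invented purely for illustration; they are not Oracle specifications. The only property that matters is that every size keeps the same ratio of compute to storage.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Rack:
    name: str
    compute_units: int  # hypothetical figure, not a real spec
    storage_units: int  # hypothetical figure, not a real spec


# Illustrative only: each size offers the same compute-to-storage ratio.
RACKS = [
    Rack("eighth", 1, 1),
    Rack("quarter", 2, 2),
    Rack("half", 4, 4),
    Rack("full", 8, 8),
]


def smallest_fit(compute_needed: int, storage_needed: int) -> Optional[Rack]:
    """Return the smallest rack that covers BOTH requirements, or None."""
    for rack in RACKS:  # RACKS is ordered smallest to largest
        if (rack.compute_units >= compute_needed
                and rack.storage_units >= storage_needed):
            return rack
    return None


def stranded(rack: Rack, compute_needed: int, storage_needed: int) -> Tuple[int, int]:
    """Units of (compute, storage) that are paid for but not needed."""
    return (rack.compute_units - compute_needed,
            rack.storage_units - storage_needed)


if __name__ == "__main__":
    # A storage-heavy database: little compute, lots of storage.
    need_compute, need_storage = 1, 7
    rack = smallest_fit(need_compute, need_storage)
    print(rack.name, stranded(rack, need_compute, need_storage))
```

With these made-up numbers, a database that needs one unit of compute and seven of storage ends up on the largest rack with most of its compute sitting idle. Swap in your own workload figures and the imbalance moves around, which is rather the point.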

Flexibility

And there’s the problem with the appliance-based solution. By definition it cannot be as flexible as the bespoke alternative. Sure I don’t want to construct my own car, but I don’t need to because there are so many options and varieties on the market. If the only pre-integrated cars available were the compact, the van and the truck I might be more tempted to test out my car-building skills. To continue using Exadata as the example, it is possible to increase storage capacity independent of the database node compute capacity by purchasing a storage expansion rack, but this is not simply storage; it’s another set of servers each containing two CPU sockets, DRAM, flash cards, an operating system and software, hard disks… and of course a requirement to purchase more Exadata licenses. You cannot properly describe this as flexibility if, as you increase the capacity of one resource, you lose control of many other resources. In the car example, what if every time I wanted to add some horsepower to the engine I was also forced to add another row of seats? It would be ridiculous.
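The horsepower-and-seats problem can be sketched in a few lines of Python. The two CPU sockets per storage server come from the description above; the capacity per server is a parameter you supply, and the values used in the example call are placeholders I have invented, not real Exadata figures. Treat this as a back-of-envelope illustration rather than a sizing tool.

```python
import math

# From the text: each storage server brings two CPU sockets with it.
CPU_SOCKETS_PER_STORAGE_SERVER = 2


def expansion_side_effects(extra_tb: float, tb_per_server: float) -> dict:
    """Count what else arrives when you only wanted more capacity.

    tb_per_server is a caller-supplied assumption, not a vendor spec.
    """
    if extra_tb <= 0 or tb_per_server <= 0:
        raise ValueError("capacities must be positive")
    # Capacity can only be bought in whole storage servers.
    servers = math.ceil(extra_tb / tb_per_server)
    return {
        "storage_servers": servers,
        "cpu_sockets": servers * CPU_SOCKETS_PER_STORAGE_SERVER,
        "os_installs": servers,  # one operating system per server
    }


if __name__ == "__main__":
    # Placeholder numbers: 100 TB wanted, 30 TB per server assumed.
    print(expansion_side_effects(extra_tb=100, tb_per_server=30))
```

In this placeholder case the request was for storage alone, yet it arrives as four more servers and eight more CPU sockets to patch, license and manage.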

Summary: Two Sides To Every Coin

Engineered Systems are a design choice. Like all choices they have pros and cons. There are alternatives – and those alternatives also have pros and cons. For me, the Engineered System is one end of a sliding scale where hardware and software are tightly integrated. This brings benefits in terms of deployment time and performance optimization, but at the expense of flexibility and with potential vendor lock-in. The opposite end of that same scale is the Software Defined Data Centre (SDDC), where hardware and software are completely independent: hardware is nothing more than a flexible resource which can be added or removed, controlled and managed, aggregated and pooled… The properties and characteristics of the hardware matter, but the vendor does not. In this concept, data centres will simply contain elastic resources such as compute, storage and networking – which is really just an extension of the cloud paradigm that everyone has been banging on about for some time now.

It’s going to be interesting to see how the engineered system concept evolves: whether it will adapt to embrace ideas such as the SDDC or whether your large, monolithic engineered system will simply become another tombstone in the corner of your data centre. It’s hard to say, but whatever you do I recommend a healthy dose of scepticism when you read the marketing brochure…

These are my thoughts and ideas – I’m not claiming them as facts. The data here is not real – it is my attempt at visualising my opinions based on experience and interaction with customers. I’m quite happy to argue my points and concede them in the face of contrary evidence. Of course I’d prefer to substantiate them with proof, but until I (or someone else) can devise a way of doing that, this is all I have. Feel free to add your voice one way or the other… and yes, I am aware that I suck at graphics.

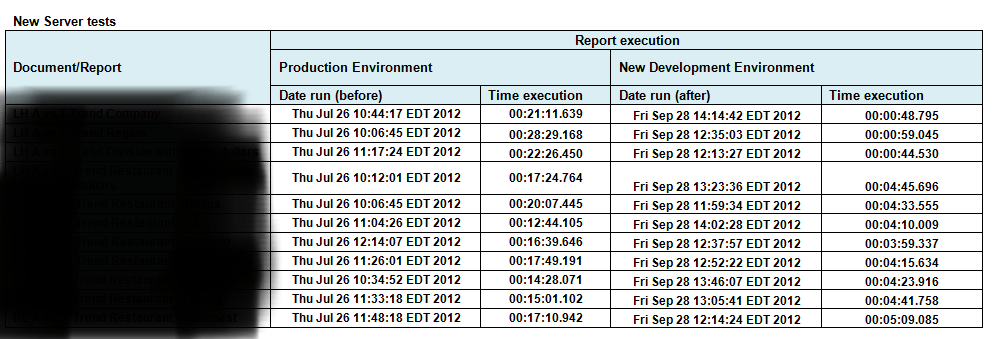

I asked how he wanted to run this rig, assuming there were no inhibitors. He wanted most of the memory allocated to Oracle, taking advantage of 11.2.0.3’s features and fixes. OK, then let’s set those and start diagnosing from there; no sense fixing a system running in a config you don’t want—especially since efforts to make the test system look and act like prod only with faster storage had all failed. A quick run through of the report test battery showed results similar to what they’d seen before. We broke it down to the smallest granule we could: run a single report, see the SQL it generates on the test system, compare its explain plan there to what production would do with it. This being my first run-in with

Sure there’s your PARALLEL_DEGREE_LIMIT, possibly affected by your core count and PARALLEL_THREADS_PER_CPU, and of course your options for PARALLEL_DEGREE_POLICY, plus PARALLEL_MAX_SERVERS which is derived from some unspecified mix of CPU_COUNT, PARALLEL_THREADS_PER_CPU and the PGA_AGGREGATE_TARGET. But have you run DBMS_RESOURCE_MANAGER.CALIBRATE_IO? Have a look over Automatic Degree of Parallelism in 11.2.0.2 [ID 1269321.1] to see if you might be limiting yourself parallel-wise because the database has no idea what your IO subsystem is capable of. Note that they do not mention workload system stats in this context. I couldn’t find verification that these play no part in DOP, but this note seems to suggest the values in DBA_RSRC_IO_CALIBRATE play a much more significant role now. Following a link about a bug (10180307) in 11.2.0.2 and below, older versions of the CALIBRATE_IO procedure could produce unpredictable results. But more important was the comment that “The per process maximum throughput (MAX_PMBPS) value [might be] too large, resulting in a low DOP while running AutoDOP.” We confirmed this by allowing CALIBRATE_IO to run for about 10 minutes and checking the results. What we saw was 31K IOPS, 328 MB/s total, 334 MB/s per process, and a latency of 0. Interesting numbers, but the explain plan still said the computed DOP was 1. So what does Oracle suggest you do if you don’t like the parallelism? Cheat and set the values manually. According to the same note, start with:

The basic premise of In-Memory Computing is that data processed in memory is faster than data processed using storage. To understand what this means, first consider the basic elements in any computer system: CPU (Central Processing Unit), Memory, Storage and Networking. The CPU is responsible for carrying out instructions, whilst memory and storage are locations where data can be stored and retrieved. Along similar lines, networking devices allow for data to be sent or received from remote destinations.

The main barriers to success in IMC are the maturity of IMC technologies, the cost of adoption and the performance impact associated with adding a persistence layer on storage. Gartner reports that IMC-enabling application infrastructure is still relatively expensive, while additional factors such as the complexity of design and implementation, as well as the new challenges associated with high availability and disaster recovery, are limiting adoption. Another significant challenge is the misperception from users that data stored using an In-Memory technology is not safe due to the volatility of DRAM. It must also be considered that as many IMC products are new to the market, many popular BI and data-manipulation tools are yet to add support for their use.

Not a real post – but a recommendation…

IMDB Fundamental Requirement #1:
