Why Kaminario?

December 15, 2015

This summer I made the decision to leave my previous employer and join another vendor in the All Flash Array space – a company called Kaminario. A lot of people have been in touch to ask me about this, so I thought I’d answer the question here… Why Kaminario?

To answer the question, we first need to look at where the All Flash industry finds itself today…

The Path To Flash Adoption

We all know that disk-based storage has been struggling to deliver to the enterprise for many years now. And most of us are aware that flash memory is the technology most suitably placed to take over the mantle as the storage medium of choice. However, even keeping in mind the typical five-to-seven year refresh cycle for enterprise storage platforms, the journey to adopt flash in the enterprise data centre has been slower than some might have expected. Why?

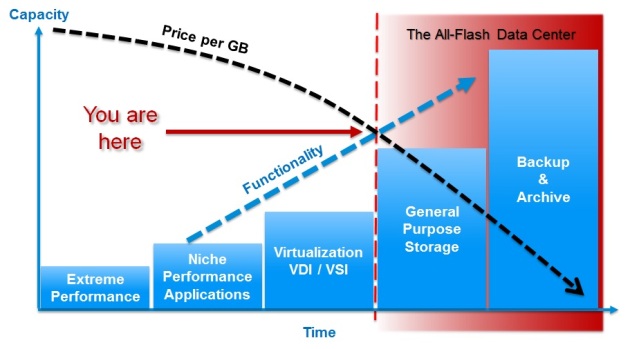

There are three reasons, in my view. The first two are pretty obvious: cost and functionality. I’ll cover the third in another post – but cost and functionality have changed drastically over the four phases of flash:

Phase One: Extreme Performance

The early days of enterprise flash storage were pioneered by the likes of Fusion-io with their PCIe flash cards. These things sold for a $/GB price that would seem obscene in today’s AFA marketplace – and (at least initially) they had almost no functionality in terms of thin provisioning, replication, snapshots, data reduction technologies and so on. They weren’t even shared storage! They were just fast blobs of flash that you could stick right inside your server to get performance which, at the time, seemed insanely fast – think <250 microseconds of latency.

This meant they were only really suitable for extreme performance requirements, where the cost and complexity were justified by the resultant improvement to the application.

Phase Two: Niche Performance Applications

The next step on the path to flash adoption was the introduction of flash as shared storage (i.e. SAN). These were the first All Flash Arrays, a marketplace pioneered by Violin Memory (my former employer) and Texas Memory Systems (subsequently acquired by IBM). The fact that they were shared allowed a larger number of applications to be migrated to flash, but they were still very much used as a niche performance play due to a lack of features such as data reduction, replication etc.

Phase Three: Virtualization for Servers and Desktops

The third phase was driven by the introduction of a very important feature: data reduction. By implementing deduplication and/or compression – thereby massively reducing the effective price in $/GB – a couple of new entrants to the AFA space were able to redefine the marketplace and leave the pioneering AFA vendors floundering. These new players were Pure Storage and EMC with its XtremIO system – and they were able to create and attack an entirely new market: virtualization. Initially they went after Virtual Desktop Infrastructure projects, which have lots of duplicate data and create lots of IOPS, but in time the market for Virtual Server Infrastructure (e.g. VMware, Hyper-V, Xen etc) became a target too.

Phase Four: General Purpose Storage

This is where we are now – or at least, it’s where we’ve just arrived. The price of flash storage has consistently dropped as the technology has advanced, while almost all of the features and functionality originally found on enterprise-class disk arrays are now available on AFAs. We’re finally at the point where, with some caveats, customers are either carrying out or planning the wholesale replacement of their general purpose disk arrays with All Flash. Indeed, with the constant evolution of NAND flash technology it’s no longer fanciful to believe that Backup and Archive workloads could also move to flash…

We are now at the inflection point where, thanks to the combination of data reduction features and constantly-evolving NAND flash development, the cost of All Flash storage has fallen as low as that of enterprise disk storage while delivering all the functionality required to replace disk entirely. We call this concept the All-Flash Data Centre.

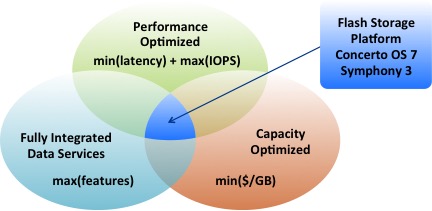

So to answer the question at the start of this post, I have joined Kaminario because I believe they are ideally placed, architecturally and commercially, to lead the adoption of this new phase of flash storage – a technology that I fundamentally believe in.

Making The All-Flash Data Centre A Reality

I mentioned earlier that NAND flash is changing and evolving all the time. It reminds me a little of smartphones – you buy the latest and greatest model only for it to become yesterday’s news almost before you’ve worked out how to use it. But the typical refresh cycle of a smartphone is one-to-two years, while for enterprise storage it’s five-to-seven. That’s a long time to risk an investment in evolving technology.

Kaminario’s K2 All Flash Array is based on its SPEAR architecture. Essentially, what Kaminario has created is a high-performance, scalable framework for taking memory and presenting it as enterprise-class storage – with all the resilience, functionality and performance you would expect. When the company was first founded this memory was just that: DRAM. But since NAND flash became economically viable, Kaminario has been using flash – and the architecture is agile enough to adopt whichever technology makes the most sense in the future.

As an example of this agility, Kaminario was the first AFA vendor to adopt 3D NAND technology and the first to adopt 3D TLC. This obviously gives it a major competitive advantage when it comes to providing the most cost-efficient All Flash Array. But what really drew me to Kaminario was their decision to allow customers to integrate future hardware (such as new types of flash) into their existing arrays rather than making them migrate to a new product as is typical in the industry. By protecting customers’ investments, Kaminario is taking some of the risk out of moving to an AFA solution. It calls this programme the Perpetual Array.

In addition to this, Kaminario has a unique ability to offer both scale out and scale up architecture (scalability is something I will discuss further in my Storage for DBAs series soon) and to deliver workload agnostic performance… all technical features that deliver real business value. But those are for discussion another day.

For today the message is simple: Kaminario is making the All Flash Data Centre a reality… and I want to be here to help customers make that happen.

The raw capacity of a flash storage product is the sum of the available capacity of each and every flash chip on which data can be stored. Imagine an…

Possibly one of the most abused terms in storage, usable capacity (also frequently called net capacity) is what you have left after taking raw capacity and removing the space set aside for system use, RAID parity, over-provisioning (i.e. headroom for garbage collection) and so on. It is guaranteed capacity, meaning you can be certain that you can store this amount of data regardless of what the data looks like.

The effective capacity of a storage system is the amount of data you could theoretically store on it in certain conditions. These conditions are assumptions, such as “my data will reduce by a factor of x:1”. There is much danger here. The assumptions are almost always related to the ability of a dataset to reduce in some way (i.e. compress, dedupe etc) – and that cannot be known until the data is actually loaded. What’s more, data changes… as does its ability to reduce.
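To make the relationship between the three terms concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration only: the function names, the 100 TB raw figure, the 25% overhead and the reduction ratios are hypothetical and do not describe any real product. It simply treats usable capacity as raw capacity minus a fixed overhead fraction, and effective capacity as usable capacity multiplied by an assumed x:1 data reduction ratio.

```python
def usable_capacity_tb(raw_tb: float, overhead_fraction: float) -> float:
    """Raw capacity minus space set aside for system use, RAID parity,
    over-provisioning and so on (modelled here as a single fraction)."""
    if not 0 <= overhead_fraction < 1:
        raise ValueError("overhead_fraction must be in [0, 1)")
    return raw_tb * (1 - overhead_fraction)


def effective_capacity_tb(usable_tb: float, reduction_ratio: float) -> float:
    """Usable capacity scaled by an ASSUMED data reduction ratio (x:1).
    The real ratio cannot be known until the data is actually loaded."""
    if reduction_ratio <= 0:
        raise ValueError("reduction_ratio must be positive")
    return usable_tb * reduction_ratio


if __name__ == "__main__":
    raw_tb = 100.0          # hypothetical raw capacity
    overhead = 0.25         # hypothetical overhead fraction
    usable_tb = usable_capacity_tb(raw_tb, overhead)
    print(f"raw: {raw_tb} TB, usable: {usable_tb} TB")

    # The same array under different reduction assumptions.
    for ratio in (1.0, 2.0, 4.0):
        effective = effective_capacity_tb(usable_tb, ratio)
        print(f"assumed {ratio}:1 reduction -> effective: {effective} TB")
```

The usable figure stays the same whatever the data looks like, while the effective figure swings entirely on the assumed ratio, which is why effective capacity should be read as an estimate rather than a guarantee.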

Did you make it through that last paragraph? Perhaps, like me, you find the HFA category “all-flash” confusingly named given the top-level category of “all flash arrays”? Then let’s go and see what Gartner says.

Flash for DBAs: A new technology is sweeping the world of storage. Flash, a type of non-volatile memory, is gradually replacing hard disk drives. It began in consumer electronic devices such as phones, cameras and tablets – then it moved into laptops in the form of SSDs. And now the last fortress of hard drives is under attack: the data centre. Find out what flash memory is, what it means for your Oracle databases and how to use it to give you performance that was never possible in the old days of disk…
